Blog category:

· MQ

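I originally meant to translate the guide, but my English is not good enough, so instead I will briefly analyze ZeroMQ's usage patterns one by one. The code here comes mainly from the examples shipped with pyzmq; please see the original text here for details. I don't know whether anyone has done similar work already; these notes are mainly for my own study. Corrections are welcome~

Introduction:

ØMQ (ZeroMQ, 0MQ, zmq): pick whichever spelling you like; they all refer to the thing we are about to discuss.

It exists for one purpose: to use machines more efficiently. Well, that is my personal take; the official line is: connect any code, anywhere.

That should be clear enough. If you insist on an analogy, think of the classic client/server (C/S) model: ZeroMQ wraps up all the low-level details, so developers only need to focus on their application logic. (It can be compared to C/S, but it is much more than that; read on.)

ZeroMQ is often mentioned alongside AMQP, but it does not implement AMQP; it uses its own lightweight wire protocol (ZMTP). In the open market it has a rival, RabbitMQ (an AMQP broker), but the story of that "rabbit" is another tale.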

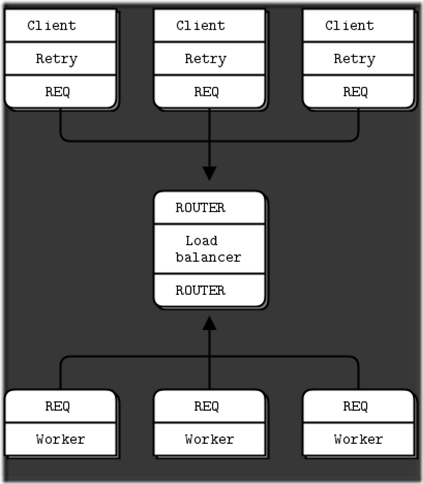

Client/server (C/S) pattern: ![clip_image001[1] clip_image001[1]](http://www.wy182000.com/wordpress/wp-content/uploads/2013/04/clip_image0011_thumb.png)

server

```python
import zmq

c = zmq.Context()
s = c.socket(zmq.REP)
#s.bind('tcp://127.0.0.1:10001')
s.bind('ipc:///tmp/zmq')

while True:
    msg = s.recv_pyobj()
    s.send_pyobj(msg)
s.close()
```

client

```python
import zmq

c = zmq.Context()
s = c.socket(zmq.REQ)
#s.connect('tcp://127.0.0.1:10001')
s.connect('ipc:///tmp/zmq')
s.send_pyobj('hello')
msg = s.recv_pyobj()
print msg
```

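Note:

In ZeroMQ this classic pattern is a strict request/reply exchange. You cannot send several messages in a row: sends and receives must strictly alternate (send, recv, send, recv...). Also, send_pyobj is specific to pyzmq and is used to pass (pickled) Python objects. The general-purpose call is plain send, as with ordinary sockets~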
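To make the lockstep rule concrete, here is a toy model of the REQ side's state machine. It is plain Python 3 without pyzmq, and the class and method names are hypothetical. A real REQ socket enforces the same rule inside libzmq and reports an error (EFSM) rather than raising RuntimeError.

```python
class ReqSocketModel:
    """Toy model of a REQ socket's send/recv state machine (not pyzmq)."""

    def __init__(self):
        self.awaiting_reply = False  # True after send(), until recv()

    def send(self, msg):
        if self.awaiting_reply:
            raise RuntimeError("REQ: must recv() the reply before sending again")
        self.awaiting_reply = True
        return msg

    def recv(self, reply):
        # 'reply' stands for the message arriving from the REP side
        if not self.awaiting_reply:
            raise RuntimeError("REQ: must send() a request before receiving")
        self.awaiting_reply = False
        return reply


req = ReqSocketModel()
req.send("hello")
print(req.recv("hello"))   # one full round trip: send, then recv
req.send("again")
try:
    req.send("too soon")   # a second send without a recv is rejected
except RuntimeError as err:
    print(err)
```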

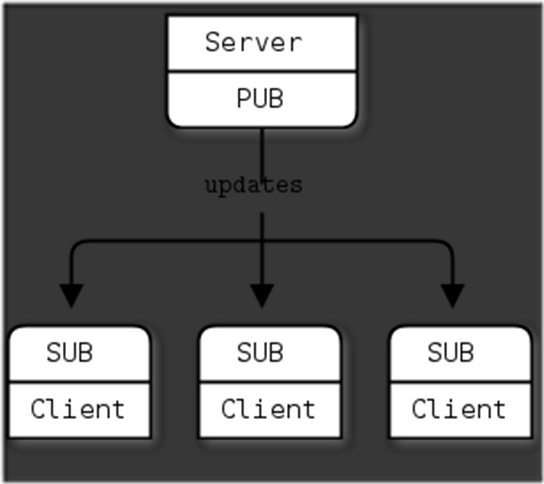

ZeroMQ First Look, Part 2: Publish/Subscribe (pub/sub)


```python
import itertools
import sys
import time

import zmq

def main():
    if len(sys.argv) != 2:
        print 'usage: publisher <bind-to>'
        sys.exit(1)

    bind_to = sys.argv[1]

    all_topics = ['sports.general', 'sports.football', 'sports.basketball',
                  'stocks.general', 'stocks.GOOG', 'stocks.AAPL',
                  'weather']

    ctx = zmq.Context()
    s = ctx.socket(zmq.PUB)
    s.bind(bind_to)

    print "Starting broadcast on topics:"
    print "   %s" % all_topics
    print "Hit Ctrl-C to stop broadcasting."
    print "Waiting so subscriber sockets can connect..."
    print
    time.sleep(1.0)

    msg_counter = itertools.count()
    try:
        for topic in itertools.cycle(all_topics):
            msg_body = str(msg_counter.next())
            print '   Topic: %s, msg:%s' % (topic, msg_body)
            # The topic travels as the first frame so that SUB-side prefix
            # filtering can match it; a pickled object (send_pyobj) would
            # not start with the topic string.
            s.send_multipart([topic, msg_body])
            # short wait so we don't hog the cpu
            time.sleep(0.1)
    except KeyboardInterrupt:
        pass

    print "Waiting for message queues to flush..."
    time.sleep(0.5)
    s.close()
    print "Done."

if __name__ == "__main__":
    main()
```

Subscriber (sub):

```python
import sys
import time
import zmq

def main():
    if len(sys.argv) < 2:
        print 'usage: subscriber <connect_to> [topic topic ...]'
        sys.exit(1)

    connect_to = sys.argv[1]
    topics = sys.argv[2:]

    ctx = zmq.Context()
    s = ctx.socket(zmq.SUB)
    s.connect(connect_to)

    # manage subscriptions
    if not topics:
        print "Receiving messages on ALL topics..."
        s.setsockopt(zmq.SUBSCRIBE, '')
    else:
        print "Receiving messages on topics: %s ..." % topics
        for t in topics:
            s.setsockopt(zmq.SUBSCRIBE, t)
    print
    try:
        while True:
            # first frame is the topic, second is the body
            topic, msg = s.recv_multipart()
            print '   Topic: %s, msg:%s' % (topic, msg)
    except KeyboardInterrupt:
        pass
    print "Done."

if __name__ == "__main__":
    main()
```

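Notes:

The publisher and subscriber roles are strict: a publisher cannot call recv, and a subscriber cannot call send. A subscriber must also set a subscription filter with setsockopt(zmq.SUBSCRIBE, ...); without one it receives nothing.

According to the official guide, data published right after the publisher starts is likely to be lost in this pattern. ZeroMQ sends so fast that publishing starts before the subscribers have finished connecting (on an internal LAN it is not that dramatic). The guide offers two solutions:

1. Have the publisher sleep for a while before sending (the guide labels this the stupid way).
2. Synchronize the publisher with its subscribers explicitly (covered in part 11, "Coordination Between Nodes").

The guide also describes another possible problem: when subscribers consume more slowly than the publisher publishes, data piles up, and it piles up on the publisher side. (A reader pointed out that it accumulates on the consumer side instead, perhaps because newer versions improved this; feedback from readers who try it is welcome, thanks!) Obviously this is unacceptable. As for a solution, perhaps the "divide and conquer" pattern that follows is one.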
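SUB filtering is a plain prefix match on the start of the message (for multipart messages, on the first frame). The Python 3 sketch below models that rule without pyzmq; `sub_accepts` is a hypothetical helper, not part of any library. The last line also shows why a payload sent with send_pyobj cannot be filtered by topic: the pickled bytes do not start with the topic string.

```python
import pickle


def sub_accepts(subscriptions, message):
    """Toy model of SUB-side filtering (not pyzmq): a message is delivered
    if it starts with any subscribed prefix. An empty prefix matches every
    message; with no subscriptions at all, nothing is delivered."""
    return any(message.startswith(prefix) for prefix in subscriptions)


messages = [b"sports.football 1", b"stocks.GOOG 2", b"weather 3"]

print([m for m in messages if sub_accepts([b"sports"], m)])  # [b'sports.football 1']
print([m for m in messages if sub_accepts([b""], m)])        # all three messages
print([m for m in messages if sub_accepts([], m)])           # []

# A pickled [topic, body] object begins with pickle header bytes rather
# than the topic, so a topic prefix can never match it.
print(sub_accepts([b"sports"], pickle.dumps(["sports.general", "0"])))  # False
```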

ZeroMQ First Look, Part 3: Divide and Conquer (push/pull)


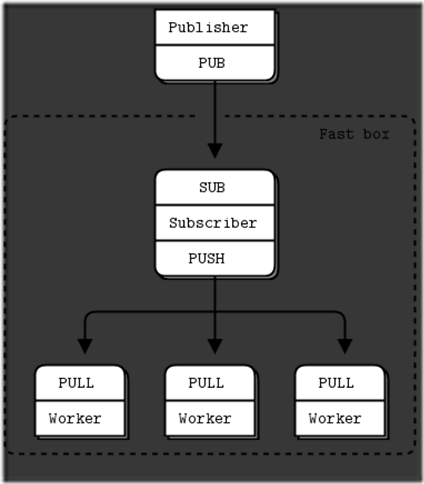

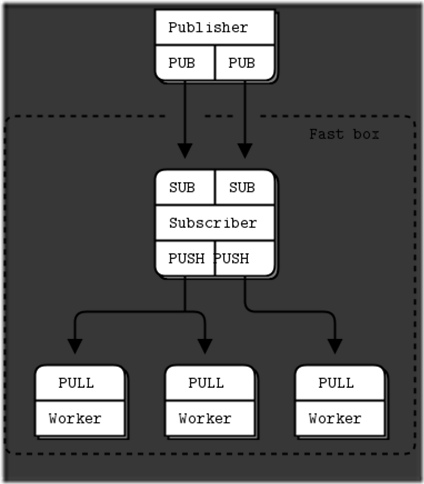

push/pull pattern: ![clip_image004[1] clip_image004[1]](http://www.wy182000.com/wordpress/wp-content/uploads/2013/04/clip_image0041_thumb.png)

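Model description:

1. Upstream (publishes the tasks)

2. Workers (in the middle, doing the actual work)

3. Downstream (the sink, which collects signals or work results)

Upstream code: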

```python
import zmq
import random
import time

context = zmq.Context()

# Socket to send messages on
sender = context.socket(zmq.PUSH)
sender.bind("tcp://*:5557")

print "Press Enter when the workers are ready: "
_ = raw_input()
print "Sending tasks to workers..."

# The first message is "0" and signals start of batch
sender.send('0')

# Initialize random number generator
random.seed()

# Send 100 tasks
total_msec = 0
for task_nbr in range(100):
    # Random workload from 1 to 100 msecs
    workload = random.randint(1, 100)
    total_msec += workload
    sender.send(str(workload))
print "Total expected cost: %s msec" % total_msec

# Give 0MQ time to deliver the queued tasks before the process exits
time.sleep(1)
```

Worker code:

```python
import sys
import time
import zmq

context = zmq.Context()

# Socket to receive messages on
receiver = context.socket(zmq.PULL)
receiver.connect("tcp://localhost:5557")

# Socket to send messages to
sender = context.socket(zmq.PUSH)
sender.connect("tcp://localhost:5558")

# Process tasks forever
while True:
    s = receiver.recv()

    # Simple progress indicator for the viewer
    sys.stdout.write('.')
    sys.stdout.flush()

    # Do the work
    time.sleep(int(s)*0.001)

    # Send results to sink
    sender.send('')
```

Downstream (sink) code:

```python
import sys
import time
import zmq

context = zmq.Context()

# Socket to receive messages on
receiver = context.socket(zmq.PULL)
receiver.bind("tcp://*:5558")

# Wait for start of batch
s = receiver.recv()

# Start our clock now
tstart = time.time()

# Process 100 confirmations
total_msec = 0
for task_nbr in range(100):
    s = receiver.recv()
    if task_nbr % 10 == 0:
        sys.stdout.write(':')
    else:
        sys.stdout.write('.')
    sys.stdout.flush()

# Calculate and report duration of batch
tend = time.time()
print "Total elapsed time: %d msec" % ((tend-tstart)*1000)
```

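Points to note:

Like pub/sub, this pattern is one-way. There are two differences:

1. When there are no consumers, the producer's messages are not consumed; they are held by the producer process.

2. Multiple consumers share a single stream of messages: if A gets a message, B will not get it.

This pattern mainly lets several consumers work in parallel when one consumer cannot keep up. It is also one strategy for the "pile-up problem" of pub/sub described earlier.

As the model diagram above shows, this is an N:N pattern. In the 1:N case, the consumers do not necessarily get equal shares. For example, a worker that connects first can receive a large batch of tasks before the others join. The N:1 case is different, as shown below: ![clip_image005[1] clip_image005[1]](http://www.wy182000.com/wordpress/wp-content/uploads/2013/04/clip_image0051_thumb.png)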
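The two distribution rules can be modelled in a few lines of Python 3, with no pyzmq involved. PUSH hands each task to the next connected worker in turn (round-robin). PULL takes one message from each upstream sender in turn (fair queuing). The function names are hypothetical, and the model assumes all workers are already connected. In practice a worker that connects first can receive many tasks before the others join.

```python
from itertools import cycle


def push_round_robin(tasks, workers):
    """Model of PUSH: hand each task to the next connected worker in turn."""
    assignment = {w: [] for w in workers}
    for task, worker in zip(tasks, cycle(workers)):
        assignment[worker].append(task)
    return assignment


def pull_fair_queue(queues):
    """Model of PULL: take one message from each non-empty upstream queue
    in turn, so no single producer can starve the others."""
    queues = [list(q) for q in queues]
    received = []
    while any(queues):
        for q in queues:
            if q:
                received.append(q.pop(0))
    return received


print(push_round_robin(range(7), ["A", "B", "C"]))
# {'A': [0, 3, 6], 'B': [1, 4], 'C': [2, 5]}
print(pull_fair_queue([["a1", "a2", "a3"], ["b1"], ["c1", "c2"]]))
# ['a1', 'b1', 'c1', 'a2', 'c2', 'a3']
```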

The main point of this pattern is that you can add more workers in the middle to achieve concurrency.

ZeroMQ First Look, Part 4: Evangelism (Why Use ZeroMQ?)


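Since these are reading notes, I follow the guide's order. At this point the author chats at length about the "why", and it is genuinely stirring to read. Fortunately I am also a fan of its rival, the "rabbit", so let me try to be somewhat objective:

In a sense ZeroMQ cannot even be called a piece of software (perhaps not even a tool). It is just a set of components that wraps up complex networking and inter-process connection details and exposes an API. Bindings are written for each language, and real applications use ZeroMQ by calling the binding for their language.

In terms of freedom, it is decentralized and extremely flexible. In terms of safety and robustness, it has unavoidable shortcomings. (Nothing is perfect to begin with.)

Er, I'm drifting off topic.

The "0" in 0MQ started out meaning "zero latency" and has since grown to mean zero cost, zero waste and zero administration as well, in keeping with a minimalist philosophy.

Are you still wrestling with complicated socket strategies in network programming, or even giving up on or avoiding networked designs because of them? ZeroMQ, the good news of network programming for the new century, is here. Stop worrying about low-level connection strategies, hand them all to ZeroMQ, and focus on what matters to you. Network programming really is that simple~

A friendly reminder:

Don't forget coding conventions.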

ZeroMQ First Look, Part 5: A First Step into the Advanced Tutorial


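You may have noticed in the previous examples that sockets always come in matched pairs.

The patterns used so far:

req/rep (request/reply): mainly used for remote procedure calls, task distribution and the like.

pub/sub (publish/subscribe): mainly used for data distribution.

push/pull (pipeline): mainly used for running many tasks in parallel.

There is one more pattern, the exclusive pair (1-to-1). People tend to reach for it out of habit, because most of us have not yet moved beyond the traditional "TCP" way of thinking. It is covered later.

Valid socket-type combinations built into ZeroMQ: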

· PUB and SUB

· REQ and REP

· REQ and XREP

· XREQ and REP

· XREQ and XREP

· XREQ and XREQ

· XREP and XREP

· PUSH and PULL

· PAIR and PAIR

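Any other combination can behave unexpectedly. It will not necessarily raise an error; messages may simply fail to get through. (The guide says future versions may report a consistent error.) Higher-level patterns are planned for the future, and of course you can build your own.

Because of the way ZeroMQ sends data, a message can be sent in one of two modes: with or without copying. Without copying, once the send succeeds the sender can no longer access the data; with copying it still can, which is mainly useful for resending. Also, messages are held in memory, so you should not casually send very large data (to avoid running out of memory). The recommended practice is to split it into parts and send them one by one. (In Python, the author found a single message limited to 4 MB.)

Addendum:

Sending in parts like this requires the ZMQ_SNDMORE flag on every part except the last. On the receiving side, check ZMQ_RCVMORE to find out whether more parts follow.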
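The framing rule can be sketched in plain Python 3. Each part is paired with a "more" flag that mirrors ZMQ_SNDMORE on the sending side and ZMQ_RCVMORE on the receiving side. The helper names are hypothetical, and this is a model rather than pyzmq code. Keep in mind that ZeroMQ queues all parts of one multipart message until the last part is sent and then delivers them atomically. The parts of a single message therefore still need to fit in memory together.

```python
def split_into_frames(data, frame_size):
    """Split a payload into (frame, more) pairs; more=True means another
    frame follows, like sending with ZMQ_SNDMORE on all but the last part."""
    chunks = [data[i:i + frame_size] for i in range(0, len(data), frame_size)] or [b""]
    return [(chunk, i < len(chunks) - 1) for i, chunk in enumerate(chunks)]


def reassemble(frames):
    """Receiver side: keep reading while the 'more' flag (ZMQ_RCVMORE) is set."""
    parts = []
    for chunk, more in frames:
        parts.append(chunk)
        if not more:
            break
    return b"".join(parts)


frames = split_into_frames(b"0123456789", 4)
print(frames)              # [(b'0123', True), (b'4567', True), (b'89', False)]
print(reassemble(frames))  # b'0123456789'
```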

Extra!

The developers seem quite ambitious: they would like to get ZeroMQ into the Linux kernel. If they manage it, that would be remarkable.

ZeroMQ First Look, Part 6: Handling Multiple Data Sources (Multiple Sockets)


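As mentioned earlier, ZeroMQ supports many-to-many connections, but only between matched socket types; that is, all the senders use the same pattern. This part deals with one process reading from several sources that use different patterns.

The guide first shows a rather "dirty" approach: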

```python
import zmq
import time

context = zmq.Context()

# PULL socket: task source on port 5557
receiver = context.socket(zmq.PULL)
receiver.connect("tcp://localhost:5557")

# SUB socket: updates filtered on "10001"
subscriber = context.socket(zmq.SUB)
subscriber.connect("tcp://localhost:5556")
subscriber.setsockopt(zmq.SUBSCRIBE, "10001")

while True:
    # Drain all pending tasks
    while True:
        try:
            rc = receiver.recv(zmq.NOBLOCK)  # non-blocking mode
        except zmq.ZMQError:
            break
        # process the task here

    # Drain all pending updates
    while True:
        try:
            rc = subscriber.recv(zmq.NOBLOCK)
        except zmq.ZMQError:
            break
        # process the update here

    # No activity: sleep 1 msec so the loop does not spin the CPU
    time.sleep(0.001)
```

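Obviously this is not elegant. One source can also keep the loop busy indefinitely while the other gets no attention.

Naturally, ZeroMQ wraps this up properly as well. zmq.Poller gives a much more elegant implementation: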

```python
import zmq

context = zmq.Context()

receiver = context.socket(zmq.PULL)
receiver.connect("tcp://localhost:5557")

subscriber = context.socket(zmq.SUB)
subscriber.connect("tcp://localhost:5556")
subscriber.setsockopt(zmq.SUBSCRIBE, "10001")

poller = zmq.Poller()
poller.register(receiver, zmq.POLLIN)
poller.register(subscriber, zmq.POLLIN)

while True:
    socks = dict(poller.poll())

    if receiver in socks and socks[receiver] == zmq.POLLIN:
        message = receiver.recv()

    if subscriber in socks and socks[subscriber] == zmq.POLLIN:
        message = subscriber.recv()
```

This approach treats all sources evenly, much like the "fair queuing" a single socket performs across several senders of the same pattern.
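zmq.Poller plays the same role for ZeroMQ sockets that select/poll play for ordinary sockets. As a standard-library analogy (not pyzmq), the sketch below uses Python 3's `selectors` module to wait on two sources at once and serve whichever is ready, instead of spinning on non-blocking reads. The two local socket pairs are assumptions standing in for the PULL and SUB sockets above.

```python
import selectors
import socket

# Two independent "sources", modelled with local socket pairs.
task_in, task_out = socket.socketpair()
news_in, news_out = socket.socketpair()

sel = selectors.DefaultSelector()
sel.register(task_in, selectors.EVENT_READ, data="task")
sel.register(news_in, selectors.EVENT_READ, data="news")

task_out.sendall(b"job-1")
news_out.sendall(b"update-1")

received = []
while len(received) < 2:
    # Block until at least one source is readable, then serve every ready one.
    for key, _events in sel.select(timeout=1.0):
        received.append((key.data, key.fileobj.recv(1024)))

print(sorted(received))  # [('news', b'update-1'), ('task', b'job-1')]

sel.close()
for s in (task_in, task_out, news_in, news_out):
    s.close()
```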

ZeroMQ First Look, Part 7: Shutting Down Workers Gracefully


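There are many ways to stop a process. ZeroMQ favors sending a signal message so that processes are unloaded and shut down in a controlled way. Here we build on the earlier "divide and conquer" example (see part 3).

Diagram: ![clip_image006[1] clip_image006[1]](http://www.wy182000.com/wordpress/wp-content/uploads/2013/04/clip_image0061_thumb.png)

Clearly the signal should come from the "sink" (downstream), because it is the one that knows how far the whole batch has progressed. Only a few changes to the original code are needed.

Worker (data processing):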

```python
import sys
import time
import zmq

context = zmq.Context()

# Socket to receive tasks on
receiver = context.socket(zmq.PULL)
receiver.connect("tcp://localhost:5557")

# Socket to send results to the sink
sender = context.socket(zmq.PUSH)
sender.connect("tcp://localhost:5558")

# Socket for control input (the sink's kill signal)
controller = context.socket(zmq.SUB)
controller.connect("tcp://localhost:5559")
controller.setsockopt(zmq.SUBSCRIBE, "")

# Process messages from both the receiver and the controller
poller = zmq.Poller()
poller.register(receiver, zmq.POLLIN)
poller.register(controller, zmq.POLLIN)
while True:
    socks = dict(poller.poll())

    if socks.get(receiver) == zmq.POLLIN:
        message = receiver.recv()

        workload = int(message)  # Workload in msecs
        time.sleep(workload / 1000.0)
        sender.send(message)

        sys.stdout.write(".")
        sys.stdout.flush()

    # Any message on the control socket means "stop"
    if socks.get(controller) == zmq.POLLIN:
        break

# Clean up before exiting
receiver.close()
sender.close()
controller.close()
context.term()
```

Sink (downstream):

```python
import sys
import time
import zmq

context = zmq.Context()

# Socket to receive results from the workers
receiver = context.socket(zmq.PULL)
receiver.bind("tcp://*:5558")

# Socket for worker control
controller = context.socket(zmq.PUB)
controller.bind("tcp://*:5559")

# Wait for start of batch
receiver.recv()

# Start our clock now
tstart = time.time()

# Process 100 confirmations
for task_nbr in xrange(100):
    receiver.recv()
    if task_nbr % 10 == 0:
        sys.stdout.write(":")
    else:
        sys.stdout.write(".")
    sys.stdout.flush()

# Calculate and report duration of batch
tend = time.time()
tdiff = tend - tstart
total_msec = tdiff * 1000
print "Total elapsed time: %d msec" % total_msec

# Send the kill signal to the workers
controller.send("KILL")
time.sleep(1)  # give the signal time to be delivered
```

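Note:

Normally, even after a process is shut down, its ports may not have been cleaned up yet (they are managed by ZeroMQ). The original guide calls these two functions: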

```c
zmq_close (server);
zmq_term (context);
```

In Python the counterparts are socket.close() and context.term(), although Python's garbage collection will usually take care of this for us~

ZeroMQ First Look, Part 8: A Memory Leak?


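Anyone who has written code that runs forever has probably run into, or at least worried about, memory leaks. So how do you deal with that in a ZeroMQ application?

The guide gives a solution for C-like languages where you manage memory yourself. (Python's GC is powerful, but keeping an eye on it never hurts.)

The tool used here is valgrind.

To avoid noise from some of ZeroMQ's own warnings, first rebuild ZeroMQ: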

```sh
$ cd zeromq
$ export CPPFLAGS=-DZMQ_MAKE_VALGRIND_HAPPY
$ ./configure
$ make clean; make
$ sudo make install
```

Then run:

```sh
valgrind --tool=memcheck --leak-check=full someprog
```

With its help, once you have fixed your code you should end up with this pleasing message:

```
==30536== ERROR SUMMARY: 0 errors from 0 contexts…
```

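This seems to be a tips chapter with little to do with ZeroMQ itself. Still, these are reading notes: the book covers it, so I note it down and learn from it.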

ZeroMQ First Look, Part 9: Scaling Gracefully (Proxies)


The network topologies discussed so far all look like this: ![clip_image007[1] clip_image007[1]](http://www.wy182000.com/wordpress/wp-content/uploads/2013/04/clip_image0071_thumb.png)

In real applications, however, most systems need a structure like this: ![clip_image008[1] clip_image008[1]](http://www.wy182000.com/wordpress/wp-content/uploads/2013/04/clip_image0081_thumb.png)

ZeroMQ naturally provides examples for this need:

1. Publish/subscribe proxy:

```python
import zmq

context = zmq.Context()

# Upstream publisher we subscribe to
frontend = context.socket(zmq.SUB)
frontend.connect("tcp://192.168.55.210:5556")

# Endpoint our own subscribers connect to
backend = context.socket(zmq.PUB)
backend.bind("tcp://10.1.1.0:8100")

# Subscribe to everything
frontend.setsockopt(zmq.SUBSCRIBE, '')

while True:
    while True:
        # Forward every part of the message, keeping part boundaries
        message = frontend.recv()
        more = frontend.getsockopt(zmq.RCVMORE)
        if more:
            backend.send(message, zmq.SNDMORE)
        else:
            backend.send(message)
            break  # Last message part
```

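Note that this proxy handles multipart messages, so large data sent as several parts is forwarded intact. This proxy implements the following: ![clip_image009[1] clip_image009[1]](http://www.wy182000.com/wordpress/wp-content/uploads/2013/04/clip_image0091_thumb.png)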
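Stripped of sockets, the inner loop of the proxy above reads a frame, checks whether more frames follow, and forwards the frame with the same flag. Here is a standard-library Python 3 model of that loop. The (frame, more) tuples stand in for recv() plus getsockopt(zmq.RCVMORE), and `forward` is a hypothetical name, not pyzmq API.

```python
def forward(frames_in, send):
    """Relay one multipart message: copy frames one by one, passing the
    'more' flag through so part boundaries are preserved downstream."""
    for frame, more in frames_in:
        send(frame, more)
        if not more:
            break  # last part of this message


out = []
incoming = iter([(b"topic", True), (b"part-1", True), (b"part-2", False),
                 (b"next-msg", False)])
forward(incoming, lambda frame, more: out.append((frame, more)))
print(out)             # [(b'topic', True), (b'part-1', True), (b'part-2', False)]
print(next(incoming))  # (b'next-msg', False) is left for the next call
```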

2. Request/reply proxy:

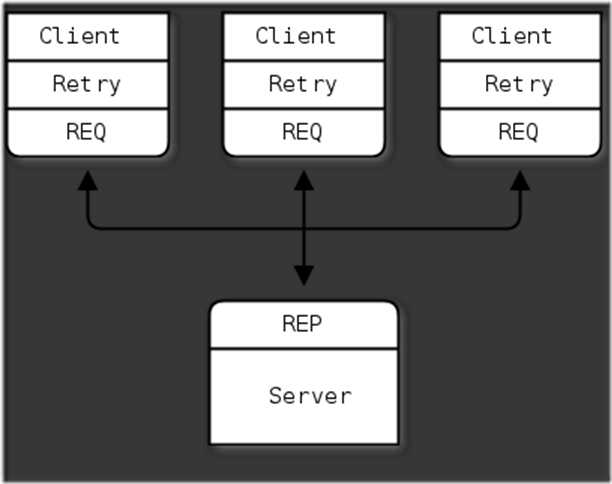

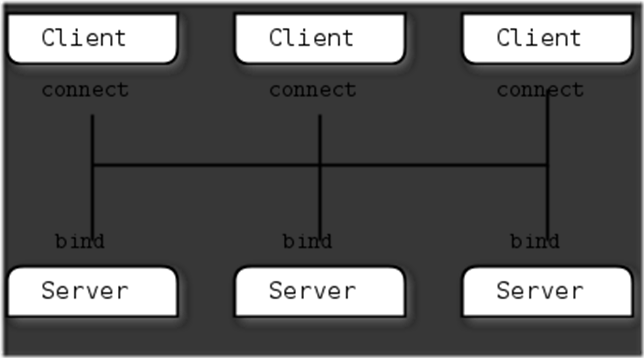

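Since ZeroMQ natively supports many-to-many connections, it might look as though no proxy is needed, as in this diagram: ![clip_image010[1] clip_image010[1]](http://www.wy182000.com/wordpress/wp-content/uploads/2013/04/clip_image0101_thumb.png)

This has a problem, though. Every client must know the addresses of all the services, and whenever a service address changes the clients must be told. The complexity of migrating and scaling then becomes impossible to predict. So we want this instead: ![clip_image011[1] clip_image011[1]](http://www.wy182000.com/wordpress/wp-content/uploads/2013/04/clip_image0111_thumb.png)

Client: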

```python
import zmq

context = zmq.Context()
socket = context.socket(zmq.REQ)
socket.connect("tcp://localhost:5559")

for request in range(1, 10):
    socket.send("Hello")
    message = socket.recv()
    print "Received reply ", request, "[", message, "]"
```

Server:

```python
import zmq

context = zmq.Context()
socket = context.socket(zmq.REP)
socket.connect("tcp://localhost:5560")

while True:
    message = socket.recv()
    print "Received request: ", message
    socket.send("World")
```

Proxy:

```python
import zmq

context = zmq.Context()
frontend = context.socket(zmq.XREP)  # facing clients
backend = context.socket(zmq.XREQ)   # facing servers
frontend.bind("tcp://*:5559")
backend.bind("tcp://*:5560")

# Initialize poll set
poller = zmq.Poller()
poller.register(frontend, zmq.POLLIN)
poller.register(backend, zmq.POLLIN)

# Switch message parts between the two sockets
while True:
    socks = dict(poller.poll())

    if socks.get(frontend) == zmq.POLLIN:
        message = frontend.recv()
        more = frontend.getsockopt(zmq.RCVMORE)
        if more:
            backend.send(message, zmq.SNDMORE)
        else:
            backend.send(message)

    if socks.get(backend) == zmq.POLLIN:
        message = backend.recv()
        more = backend.getsockopt(zmq.RCVMORE)
        if more:
            frontend.send(message, zmq.SNDMORE)
        else:
            frontend.send(message)
```

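Together, the code above forms this network: ![clip_image012[1] clip_image012[1]](http://www.wy182000.com/wordpress/wp-content/uploads/2013/04/clip_image0121_thumb.png)

Clients and servers are now transparent to each other, and all is suddenly quiet in the world…

This part had lots of diagrams and code, all of it solid material. But since 0MQ has already thought of this, why on earth should we write proxies ourselves? So, solid as all of the above is, you can probably forget it right away. Here is the proxy that comes with 0MQ: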

```python
import zmq

def main():
    context = zmq.Context(1)

    # Socket facing clients
    frontend = context.socket(zmq.XREP)
    frontend.bind("tcp://*:5559")

    # Socket facing services
    backend = context.socket(zmq.XREQ)
    backend.bind("tcp://*:5560")

    # Built-in QUEUE device; blocks and shuttles messages both ways
    zmq.device(zmq.QUEUE, frontend, backend)

    # Normally never reached, but clean up anyway
    frontend.close()
    backend.close()
    context.term()

if __name__ == "__main__":
    main()
```

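That is the request/reply proxy. ZeroMQ provides three standard devices:

Request/reply: QUEUE (XREP/XREQ)

Publish/subscribe: FORWARDER (SUB/PUB)

Pipeline: STREAMER (PULL/PUSH)

Special reminder:

The guide does not recommend mixing socket types in these devices; if you do, you are on your own. As the guide puts it, if you need to mix them, you are better off writing your own proxy~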

ZeroMQ First Look, Part 10: Using Multiple Threads Gracefully


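"Perhaps ZeroMQ is the best environment for writing multithreaded code!" So says the official guide.

What it really aims to support is an Erlang-style message-passing model. Traditional multithreading always comes with locks and all sorts of strange problems. ZeroMQ's approach to threads aims to do away with locks. Put simply, a piece of data may be *held* by only one thread at any moment, whereas the traditional rule is that only one thread may *operate* on it at a time. Locks are a by-product of several threads possibly operating on the same data at once. This makes ZeroMQ's angle clear: by passing data between threads as messages, it guarantees that any piece of data is held by only one thread at a time.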

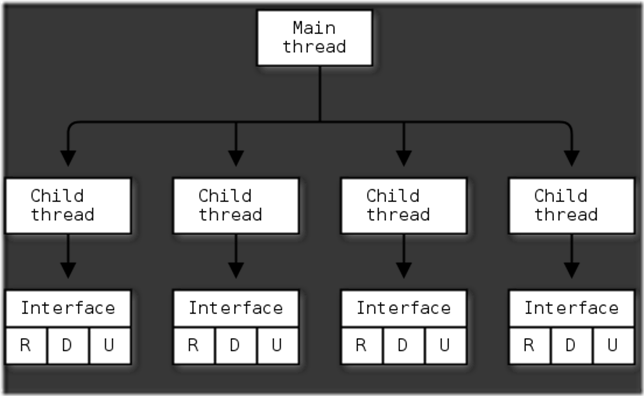

Here is the classic request/reply example, served by a pool of worker threads: ![clip_image013[1] clip_image013[1]](http://www.wy182000.com/wordpress/wp-content/uploads/2013/04/clip_image0131_thumb.png)

```python
import time
import threading
import zmq

def worker_routine(worker_url, context):
    # Socket to talk to the dispatcher
    socket = context.socket(zmq.REP)

    socket.connect(worker_url)

    while True:
        string = socket.recv()
        print("Received request: [%s]\n" % (string))

        # do some 'work'
        time.sleep(1)

        # send reply back to client
        socket.send("World")

def main():
    url_worker = "inproc://workers"
    url_client = "tcp://*:5555"

    # The context is shared by all threads; sockets are not
    context = zmq.Context(1)

    # Socket to talk to clients
    clients = context.socket(zmq.XREP)
    clients.bind(url_client)

    # Socket to talk to workers (bound before the workers connect)
    workers = context.socket(zmq.XREQ)
    workers.bind(url_worker)

    # Launch pool of worker threads
    for i in range(5):
        thread = threading.Thread(target=worker_routine, args=(url_worker, context, ))
        thread.start()

    zmq.device(zmq.QUEUE, clients, workers)

    # Normally never reached, but clean up anyway
    clients.close()
    workers.close()
    context.term()

if __name__ == "__main__":
    main()
```

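Splitting the work this way has a hidden benefit: if you ever need to switch from threads to processes, the code can very easily be cut apart and reused.

The guide also gives a reason for using threads rather than processes:

Processes are too expensive. (Mind you, in Python multiple processes are often preferred over threads because of the GIL.)

The example above does not seem to involve any communication between the worker threads, though. Since ZeroMQ supports multithreading, it naturally covers that too: ![clip_image014[1] clip_image014[1]](http://www.wy182000.com/wordpress/wp-content/uploads/2013/04/clip_image0141_thumb.png)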

```python
import threading
import zmq

def step1(context):
    # Signal downstream to step 2
    sender = context.socket(zmq.PAIR)
    sender.connect("inproc://step2")
    sender.send("")
    sender.close()

def step2(context):
    # Bind the inproc endpoint before starting the thread that connects to it
    receiver = context.socket(zmq.PAIR)
    receiver.bind("inproc://step2")

    thread = threading.Thread(target=step1, args=(context, ))
    thread.start()

    # Wait for signal from step 1
    string = receiver.recv()
    receiver.close()

    # Signal downstream to step 3
    sender = context.socket(zmq.PAIR)
    sender.connect("inproc://step3")
    sender.send("")
    sender.close()

def main():
    context = zmq.Context(1)

    # Bind the inproc endpoint before starting the thread that connects to it
    receiver = context.socket(zmq.PAIR)
    receiver.bind("inproc://step3")

    thread = threading.Thread(target=step2, args=(context, ))
    thread.start()

    # Wait for signal from step 2
    string = receiver.recv()

    print("Test successful!\n")

    receiver.close()
    context.term()

if __name__ == "__main__":
    main()
```

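Note:

A new socket type is used here: PAIR, designed specifically for communication between threads. (The guide also explains why the types seen earlier, such as request/reply, are not used instead.) This type is prompt, reliable and safe. It can also be used between processes, where it works much like request/reply.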

ZeroMQ First Look, Part 11: Coordination Between Nodes


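The previous part covered coordination between threads, which ZeroMQ's PAIR sockets handle elegantly. Between nodes (i.e. processes), however, PAIR is less suitable, although it does work. The guide gives two reasons:

1. Nodes come and go, whereas threads do not (threads are stable), and PAIR sockets do not reconnect automatically.

2. The number of threads is fixed and predictable, whereas the number of nodes varies and cannot be predicted.

Conclusion: PAIR suits stable, controlled environments.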

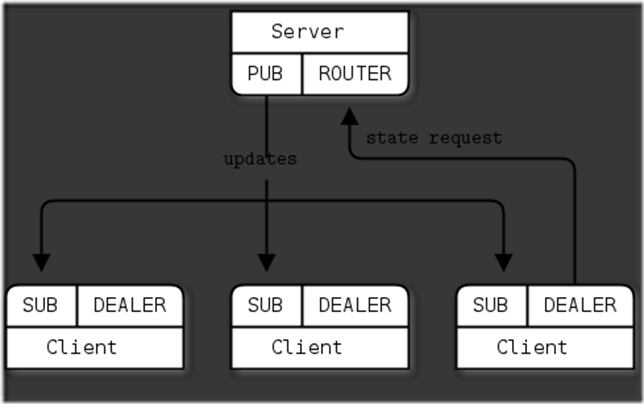

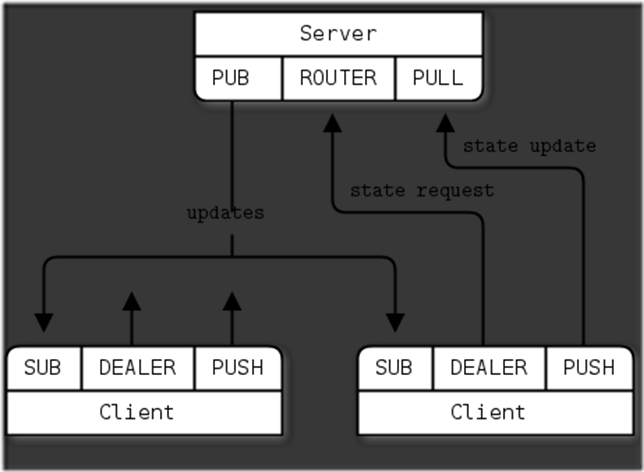

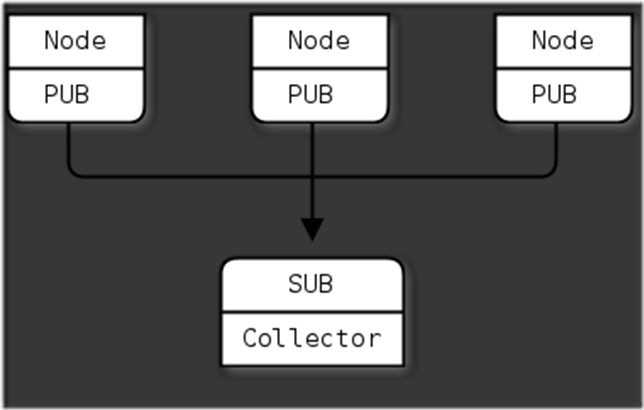

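Hence this part. You may remember that the publish/subscribe section described that pattern as not very reliable, mainly at startup: it easily publishes, and so loses, some data before the connections are established. Here we solve that problem by coordinating the nodes.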

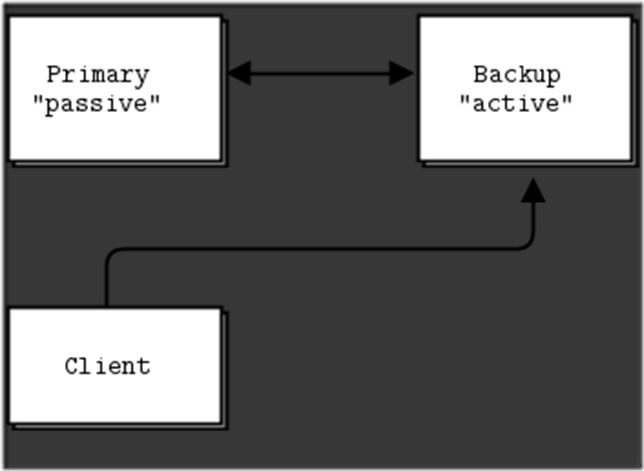

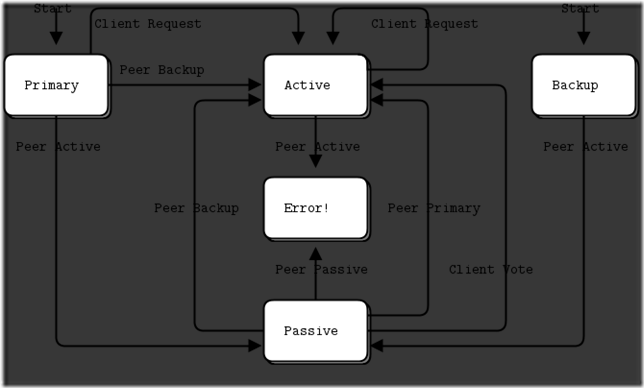

Model diagram: ![clip_image015[1] clip_image015[1]](http://www.wy182000.com/wordpress/wp-content/uploads/2013/04/clip_image0151_thumb.png)

Publisher:

```python
import zmq

# We wait for this many subscribers before publishing
SUBSCRIBERS_EXPECTED = 2

def main():
    context = zmq.Context()

    # Socket to talk to clients
    publisher = context.socket(zmq.PUB)
    publisher.bind('tcp://*:5561')

    # Socket to receive synchronization signals
    syncservice = context.socket(zmq.REP)
    syncservice.bind('tcp://*:5562')

    # Get synchronization from subscribers
    subscribers = 0
    while subscribers < SUBSCRIBERS_EXPECTED:
        msg = syncservice.recv()   # wait for synchronization request
        syncservice.send('')       # send synchronization reply
        subscribers += 1
        print "+1 subscriber"

    # Now broadcast 1M updates followed by END
    for i in range(1000000):
        publisher.send('Rhubarb')

    publisher.send('END')

if __name__ == '__main__':
    main()
```

Subscriber:

```python
import zmq

def main():
    context = zmq.Context()

    # First, connect our subscriber socket
    subscriber = context.socket(zmq.SUB)
    subscriber.connect('tcp://localhost:5561')
    subscriber.setsockopt(zmq.SUBSCRIBE, "")

    # Second, synchronize with the publisher
    syncclient = context.socket(zmq.REQ)
    syncclient.connect('tcp://localhost:5562')

    # send a synchronization request
    syncclient.send('')

    # wait for the synchronization reply
    syncclient.recv()

    # Third, get our updates and report how many we got
    nbr = 0
    while True:
        msg = subscriber.recv()
        if msg == 'END':
            break
        nbr += 1

    print 'Received %d updates' % nbr

if __name__ == '__main__':
    main()
```

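As the example shows, a request/reply handshake solves the earlier problem. If that still does not reassure you, the publisher can also publish a specific probe message and have the subscribers reply only once they receive it, which raises the safety margin another notch. There is one big precondition, though: you must somehow know or estimate the number of subscribers in advance (SUBSCRIBERS_EXPECTED above) for the application to work.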
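Here is a toy threaded model of why the handshake works. It uses only the Python 3 standard library: `queue.Queue` stands in for the sockets, and all names are hypothetical. The `publish` helper behaves like PUB, delivering only to subscribers that are connected at that moment. The publisher blocks until the expected number of subscribers have checked in, so none of them misses any of the 1000 updates.

```python
import queue
import threading

SUBSCRIBERS_EXPECTED = 2


def subscriber(sync, results, idx):
    inbox = queue.Queue()
    sync.put(inbox)             # "connect" and report ready (like the REQ sync)
    count = 0
    while inbox.get() != "END":
        count += 1
    results[idx] = count


def publish(connected, msg):
    # Like PUB: only subscribers connected right now get the message;
    # anything sent before a subscriber connects is simply gone.
    for inbox in connected:
        inbox.put(msg)


sync = queue.Queue()
results = {}
subs = [threading.Thread(target=subscriber, args=(sync, results, i))
        for i in range(SUBSCRIBERS_EXPECTED)]
for t in subs:
    t.start()

# Publisher blocks until every expected subscriber has checked in.
connected = [sync.get() for _ in range(SUBSCRIBERS_EXPECTED)]

for _ in range(1000):
    publish(connected, "Rhubarb")
publish(connected, "END")

for t in subs:
    t.join()
print(sorted(results.items()))  # [(0, 1000), (1, 1000)]
```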

ZeroMQ First Look, Part 12: Safety and Stability


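Most people who try ZeroMQ are probably pleased with its decentralized freedom, yet doubtful or even frustrated about how reliably it delivers data (especially if you happen to know the "rabbit" as well).

Here, perhaps, we can make up for some of that and give you more confidence in using it.

ZeroMQ's "zero-copy" mechanism deserves much of the credit for its unmatched speed. It avoids extra buffering and moving of data and cuts down on acknowledgement-style exchanges. Along with the speed, however, it also lowers the reliability of delivery. Switching copying on improves stability at some cost in speed.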

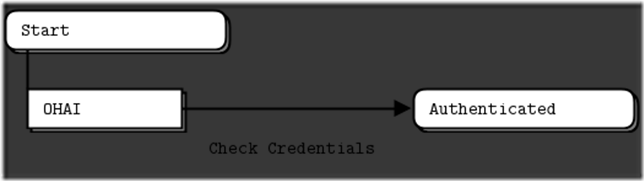

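Besides zero-copy, ZeroMQ also provides an identity (naming) mechanism for building so-called "durable sockets". As earlier parts showed, ZeroMQ takes over everything at the transport level. With durable sockets, even if your program crashes or a node is lost for some other reason (goes down?), ZeroMQ holds data for that node so that it can pick up what it missed when it reconnects. (Durable sockets are a ZeroMQ 2.x feature; they were removed in ZeroMQ 3.x.)

Without an identity: ![clip_image016[1] clip_image016[1]](http://www.wy182000.com/wordpress/wp-content/uploads/2013/04/clip_image0161_thumb.png)

With an identity: ![clip_image017[1] clip_image017[1]](http://www.wy182000.com/wordpress/wp-content/uploads/2013/04/clip_image0171_thumb.png)

Relevant setting: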

```c
zmq_setsockopt (socket, ZMQ_IDENTITY, "Lucy", 4);
```

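Notes:

1. To use an identity, you must set it before connecting.

2. Don't reuse identities!

3. Don't change the identity once the connection is established.

4. Preferably don't use random identities.

5. If the receiver needs to know the sender's identity, attach it to the message when sending (XREP sockets obtain it automatically).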

ZeroMQ First Look, Part 13: Publish/Subscribe, Advanced


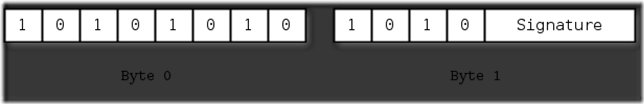

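An earlier part suggested splitting large data into several smaller parts sent one by one, to avoid problems such as running out of memory when a single message is too large. The same applies to publish/subscribe, and here we meet a new term: the envelope.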

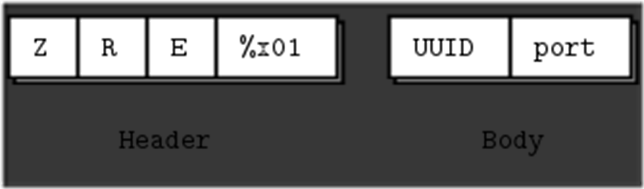

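The enveloped data structure looks like this: ![clip_image018[1] clip_image018[1]](http://www.wy182000.com/wordpress/wp-content/uploads/2013/04/clip_image0181_thumb.png)

The key travels in the first frame, and ZeroMQ delivers a multipart message atomically (all parts or none). So you need not worry about the awkward case where a subscriber ends up holding only some parts of a split message.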
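Here is a small Python 3 model of enveloped delivery. It is not pyzmq, and `deliver` is a hypothetical name. Only the first frame (the key) is matched against the subscriptions, and a matching message is handed over whole. Because matching is by prefix, a subscription to `B` would also accept a key such as `Bob`. Pick keys that are not prefixes of one another.

```python
def deliver(subscriptions, message_frames):
    """Toy model of SUB filtering on an enveloped message: only the first
    frame (the key) is matched, and the message is delivered whole or not
    at all."""
    key = message_frames[0]
    if any(key.startswith(prefix) for prefix in subscriptions):
        return list(message_frames)
    return None


stream = [[b"A", b"We don't want to see this"],
          [b"B", b"We would like to see this"]]
for msg in stream:
    print(deliver([b"B"], msg))
```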

Publisher:

```python
import time
import zmq

def main():
    # Prepare our context and publisher
    context = zmq.Context(1)
    publisher = context.socket(zmq.PUB)
    publisher.bind("tcp://*:5563")

    while True:
        # Write two messages, each with an envelope (key) and content
        publisher.send_multipart(["A", "We don't want to see this"])
        publisher.send_multipart(["B", "We would like to see this"])
        time.sleep(1)

    # Never reached, but clean up anyway
    publisher.close()
    context.term()

if __name__ == "__main__":
    main()
```

Subscriber:

```python
import zmq

def main():
    # Prepare our context and subscriber
    context = zmq.Context(1)
    subscriber = context.socket(zmq.SUB)
    subscriber.connect("tcp://localhost:5563")
    subscriber.setsockopt(zmq.SUBSCRIBE, "B")

    while True:
        # Read envelope (key) and contents
        [address, contents] = subscriber.recv_multipart()
        print("[%s] %s\n" % (address, contents))

    # Never reached, but clean up anyway
    subscriber.close()
    context.term()

if __name__ == "__main__":
    main()
```

ZeroMQ First Look, Part 14: Identities, Advanced


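The identity mechanism mentioned earlier is really a double-edged sword. Holding data while waiting for a peer to reconnect also adds to the burden (and danger) on whoever holds it. In publish/subscribe especially, one absent subscriber can affect the whole system.

Here is a pair of examples. While they run, restart the subscriber and watch the publisher process.

Publisher: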

```python
import zmq
import time

context = zmq.Context()

sync = context.socket(zmq.PULL)
sync.bind("tcp://*:5564")

publisher = context.socket(zmq.PUB)
publisher.bind("tcp://*:5565")

sync_request = sync.recv()

for n in xrange(10):
    msg = "Update %d" % n
    publisher.send(msg)
    time.sleep(1)

publisher.send("END")
time.sleep(1)  # Give 0MQ/2.0.x time to flush output
```

Subscriber:

```python
import zmq
import time

context = zmq.Context()

subscriber = context.socket(zmq.SUB)
subscriber.setsockopt(zmq.IDENTITY, "Hello")
subscriber.setsockopt(zmq.SUBSCRIBE, "")
subscriber.connect("tcp://localhost:5565")

sync = context.socket(zmq.PUSH)
sync.connect("tcp://localhost:5564")
sync.send("")

while True:
    data = subscriber.recv()
    print data
    if data == "END":
        break
```

Output on the subscriber side:

```
$ durasub
Update 0
Update 1
Update 2
^C
$ durasub
Update 3
Update 4
Update 5
Update 6
Update 7
^C
$ durasub
Update 8
Update 9
END
```

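The publisher stored the data, and its memory usage kept climbing (very dangerous). So whether to use identities needs careful thought. As a safeguard, ZeroMQ also provides a "high-water mark" (HWM): once the sender holds a certain number of messages, it stops storing further ones, which keeps the risk under control. This mechanism also partly solves the slow-consumer problem here.

Test results with a high-water mark set: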

```
$ durasub
Update 0
Update 1
^C
$ durasub
Update 2
Update 3
Update 7
Update 8
Update 9
END
```

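The high-water mark rules out running out of memory, but at the cost of losing data. To go with it, ZeroMQ also provided a "swap" feature that spills in-memory data to disk, so you neither use up memory nor lose data. (ZMQ_SWAP exists only in ZeroMQ 2.x; it was removed in 3.x.)

Implementation: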

```python
import zmq
import time

context = zmq.Context()

# Subscriber tells us when it's ready here
sync = context.socket(zmq.PULL)
sync.bind("tcp://*:5564")

# We send updates via this socket
publisher = context.socket(zmq.PUB)
publisher.bind("tcp://*:5565")

# Prevent publisher overflow from slow subscribers
publisher.setsockopt(zmq.HWM, 1)

# Specify the swap space in bytes, this covers all subscribers
publisher.setsockopt(zmq.SWAP, 25000000)

# Wait for synchronization request
sync_request = sync.recv()

# Now broadcast exactly 10 updates with pause
for n in xrange(10):
    msg = "Update %d" % n
    publisher.send(msg)
    time.sleep(1)

publisher.send("END")
time.sleep(1)  # Give 0MQ/2.0.x time to flush output
```

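Points to note:

The HWM and swap sizes must be chosen according to how the application actually behaves. An HWM set too low will hurt throughput.

If the side that stores the data crashes, all of that data is gone for good.

Special notes on the high-water mark:

PUB sockets (and XREP sockets) drop further messages once the HWM is reached; the other socket types block instead.

For communication between threads (inproc), the effective HWM is the sum of the values set by both sides. If one side does not set it, the HWM limit does not take effect.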
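The two HWM policies can be modelled with a bounded queue. This is a Python 3 sketch; `OutboundQueue` and its `policy` argument are hypothetical and are not socket options. With `"drop"` (like PUB) a full queue silently discards new messages. With `"block"` (like the other types) the sender would have to wait, shown here as `send()` returning False.

```python
from collections import deque


class OutboundQueue:
    """Toy model of a socket's outgoing queue with a high-water mark.
    policy="drop" mimics PUB (extra messages are discarded);
    policy="block" mimics the other types (the sender must wait)."""

    def __init__(self, hwm, policy):
        self.hwm, self.policy = hwm, policy
        self.items = deque()
        self.dropped = 0

    def send(self, msg):
        """Return True if accepted; False if the sender would have to block."""
        if len(self.items) < self.hwm:
            self.items.append(msg)
            return True
        if self.policy == "drop":
            self.dropped += 1
            return True          # PUB-like: send "succeeds" but the message is gone
        return False             # a blocking socket would wait here


pub = OutboundQueue(hwm=3, policy="drop")
for n in range(10):
    pub.send("Update %d" % n)
print(list(pub.items), pub.dropped)  # ['Update 0', 'Update 1', 'Update 2'] 7

push = OutboundQueue(hwm=3, policy="block")
print([push.send(n) for n in range(5)])  # [True, True, True, False, False]
```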

ZeroMQ First Look, Part 15: Request/Reply, Advanced (1): Message Envelopes


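A whole long chapter is devoted to advanced request/reply, so it must be important (it certainly is).

The previous part covered envelopes in publish/subscribe. Request/reply uses them too, but there the enveloping is done by ZeroMQ itself and is transparent to the application. The plain socket types and the X types behave differently. For example, here is a REQ connected to an XREP: ![clip_image020[1] clip_image020[1]](http://www.wy182000.com/wordpress/wp-content/uploads/2013/04/clip_image0201_thumb.png)

Explanation:

The third part is the data actually sent.

The second part is an empty delimiter frame that REQ adds under the hood when it sends a request to XREP.

The first part is the address (identity) of the sending peer. XREP prepends it so that it knows where to route the reply.

Note:

As noted earlier, XREP is what routes replies back, so it is XREP that wraps the request in an envelope. If a message passes through several XREP sockets, the structure becomes: ![clip_image021[1] clip_image021[1]](http://www.wy182000.com/wordpress/wp-content/uploads/2013/04/clip_image0211_thumb.png)

Also, if no identity is set, XREP assigns a temporary one automatically: ![clip_image022[1] clip_image022[1]](http://www.wy182000.com/wordpress/wp-content/uploads/2013/04/clip_image0221_thumb.png)

Otherwise, it looks like this: ![clip_image023[1] clip_image023[1]](http://www.wy182000.com/wordpress/wp-content/uploads/2013/04/clip_image0231_thumb.png)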
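The envelope can be modelled as a plain list of frames in Python 3, with no pyzmq. The four helper functions are hypothetical and only mirror the three parts listed above: the peer identity, the empty delimiter, and the body. XREP prepends the identity on receive and pops it on send to choose the destination. REQ adds the delimiter on send and strips everything up to it on receive.

```python
def req_send(body):
    # REQ prepends an empty delimiter frame to the request body.
    return [b"", body]


def xrep_receive(peer_identity, frames):
    # XREP prepends the identity of the peer the message came from.
    return [peer_identity] + frames


def xrep_send(frames, peers):
    # XREP pops the first frame and uses it to pick the destination peer.
    identity, rest = frames[0], frames[1:]
    peers[identity].append(rest)


def req_receive(frames):
    # REQ strips everything up to and including the empty delimiter.
    return frames[frames.index(b"") + 1:]


peers = {b"Hello": []}
request = xrep_receive(b"Hello", req_send(b"Hi there"))
print(request)  # [b'Hello', b'', b'Hi there']

reply = request[:-1] + [b"Hi yourself"]   # keep the envelope, swap the body
xrep_send(reply, peers)
print(req_receive(peers[b"Hello"][0]))    # [b'Hi yourself']
```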

Here is some code to verify this:

```python
import zmq
import zhelpers

context = zmq.Context()

sink = context.socket(zmq.XREP)
sink.bind("inproc://example")

# First allow 0MQ to set the identity
anonymous = context.socket(zmq.XREQ)
anonymous.connect("inproc://example")
anonymous.send("XREP uses a generated UUID")
zhelpers.dump(sink)

# Then set the identity ourself
identified = context.socket(zmq.XREQ)
identified.setsockopt(zmq.IDENTITY, "Hello")
identified.connect("inproc://example")
identified.send("XREP socket uses REQ's socket identity")
zhelpers.dump(sink)
```

The zhelpers module used by the code above:

```python
from random import randint

import zmq


# Receives all message parts from socket, prints neatly
def dump(zsocket):
    print "----------------------------------------"
    for part in zsocket.recv_multipart():
        print "[%03d]" % len(part),
        if all(31 < ord(c) < 128 for c in part):
            print part
        else:
            print "".join("%x" % ord(c) for c in part)


# Set simple random printable identity on socket
def set_id(zsocket):
    identity = "%04x-%04x" % (randint(0, 0x10000), randint(0, 0x10000))
    zsocket.setsockopt(zmq.IDENTITY, identity)
```

ZeroMQ First Look, Part 16: Request/Reply, Advanced (2): Custom Routing 1


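The previous part explained that XREP's main job is to wrap messages and tag them so they can be delivered conveniently. Looked at from another angle, isn't that just routing? In fact it was already introduced in "scaling gracefully" (part 9). Here we explore XREP in depth.

First, let's sort out the differences between these socket types (the similar names really are a trap):

REQ, which the guide calls the "mama" socket, because it actively makes requests and insists on getting replies (strictly synchronous).

REP, the "papa" socket, answers requests (it never takes the initiative; also strictly synchronous).

XREQ, the "dealer" socket, fair-queues incoming messages and distributes outgoing ones evenly across the connected REP or XREP sockets.

XREP, the "router" socket, forwards messages to whichever connected peer they are addressed to. It can connect to any type, though it seems to have a natural affinity with mama.

The traditional view is that request/reply must be synchronous. Here, though, it can clearly be made asynchronous, as long as no papa or mama sits in the middle of the chain.

Custom routing normally uses these four kinds of connection: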

XREP-to-XREQ.

XREP-to-REQ.

XREP-to-REP.

XREP-to-XREP.

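With these basic connections, custom routing is limited only by your imagination. Before the detailed walkthroughs, though, a disclaimer:

Custom routing is risky; use it with care!

First up is XREP-to-XREQ:

This is a fairly simple combination. XREQ is used in three scenarios: 1. aggregation, 2. proxy distribution, 3. replying to requests.

Note that if XREQ is used to send replies, it is best to have only one XREP connected to it. XREQ cannot address a destination; it spreads messages evenly across all the XREP sockets it is connected to.

Here is an aggregation example: ![clip_image024[1] clip_image024[1]](http://www.wy182000.com/wordpress/wp-content/uploads/2013/04/clip_image0241_thumb.png)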

```python
import time
import random
from threading import Thread
import zmq

def worker_a(context):
    worker = context.socket(zmq.XREQ)
    worker.setsockopt(zmq.IDENTITY, 'A')
    worker.connect("ipc://routing.ipc")

    total = 0
    while True:
        request = worker.recv()
        finished = request == "END"
        if finished:
            print "A received:", total
            break
        total += 1

def worker_b(context):
    worker = context.socket(zmq.XREQ)
    worker.setsockopt(zmq.IDENTITY, 'B')
    worker.connect("ipc://routing.ipc")

    total = 0
    while True:
        request = worker.recv()
        finished = request == "END"
        if finished:
            print "B received:", total
            break
        total += 1

context = zmq.Context()
client = context.socket(zmq.XREP)
client.bind("ipc://routing.ipc")

Thread(target=worker_a, args=(context,)).start()
Thread(target=worker_b, args=(context,)).start()

time.sleep(1)

for _ in xrange(10):
    if random.randint(0, 2) > 0:
        client.send("A", zmq.SNDMORE)
    else:
        client.send("B", zmq.SNDMORE)

    client.send("This is the workload")

client.send("A", zmq.SNDMORE)
client.send("END")

client.send("B", zmq.SNDMORE)
client.send("END")

time.sleep(1)  # Give 0MQ/2.0.x time to flush output
```

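Message structure on the wire: ![clip_image025[1] clip_image025[1]](http://www.wy182000.com/wordpress/wp-content/uploads/2013/04/clip_image0251_thumb.png)

Because there are no replies, this case is simple. With replies it gets a little more complicated, and you need the poller introduced earlier (part 6) to schedule the work. The sleep in the code waits for the receivers to get ready. Otherwise data can be lost, just as with publish/subscribe, because XREP silently drops messages addressed to a peer it does not (yet) know. Apart from XREP and PUB, no socket type has this problem (they all block and wait).

Note:

Routing is never inherently safe. If you want guarantees, the receiver should answer the router when it receives routed data (send an acknowledgement).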
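Here is a minimal Python 3 model of XREP's send side (not pyzmq; `route` is a hypothetical name). The first frame names the destination peer, and a message for an identity that has not connected yet disappears without an error. That is why the example above sleeps before sending, and why an acknowledgement is the only way to know a routed message arrived.

```python
def route(frames, peers, undelivered):
    """Toy model of XREP send(): the first frame names the destination peer.
    A message for an unknown identity is silently dropped by real XREP;
    here it is collected in 'undelivered' so we can see it."""
    identity, body = frames[0], frames[1:]
    if identity in peers:
        peers[identity].append(body)
    else:
        undelivered.append(frames)


peers = {b"A": [], b"B": []}
lost = []
route([b"A", b"This is the workload"], peers, lost)
route([b"C", b"This is the workload"], peers, lost)   # no worker "C" yet
print({k: len(v) for k, v in peers.items()}, len(lost))  # {b'A': 1, b'B': 0} 1
```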

ZeroMQ First Look, Part 17: Request/Reply, Advanced (3): Custom Routing 2


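XREP-to-REQ:

Classic "mama" behavior: she only listens when she really wants to hear you. So first REQ has to tell you "I'm ready, go ahead", and only then can you pour your heart out…

As with XREQ, a REQ should generally connect to only one XREP (unless you want failover, which is not recommended).

Example model: ![clip_image026[1] clip_image026[1]](http://www.wy182000.com/wordpress/wp-content/uploads/2013/04/clip_image0261_thumb.png)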

```python
import time
import random
from threading import Thread

import zmq

import zhelpers

NBR_WORKERS = 10

def worker_thread(context):
    worker = context.socket(zmq.REQ)

    # We use a string identity for ease here
    zhelpers.set_id(worker)
    worker.connect("ipc://routing.ipc")

    total = 0
    while True:
        # Tell the router we're ready for work
        worker.send("ready")

        # Get workload from router, until finished
        workload = worker.recv()
        finished = workload == "END"
        if finished:
            print "Processed: %d tasks" % total
            break
        total += 1

        # Do some random work
        time.sleep(random.random() / 10 + 10 ** -9)

context = zmq.Context()
client = context.socket(zmq.XREP)
client.bind("ipc://routing.ipc")

for _ in xrange(NBR_WORKERS):
    Thread(target=worker_thread, args=(context,)).start()

for _ in xrange(NBR_WORKERS * 10):
    # LRU worker is next waiting in the queue
    address = client.recv()
    empty = client.recv()
    ready = client.recv()

    client.send(address, zmq.SNDMORE)
    client.send("", zmq.SNDMORE)
    client.send("This is the workload")

# Now ask mama to shut down and report their results
for _ in xrange(NBR_WORKERS):
    address = client.recv()
    empty = client.recv()
    ready = client.recv()

    client.send(address, zmq.SNDMORE)
    client.send("", zmq.SNDMORE)
    client.send("END")

time.sleep(1)  # Give 0MQ/2.0.x time to flush output
```

Message structure on the wire: ![clip_image027[1] clip_image027[1]](http://www.wy182000.com/wordpress/wp-content/uploads/2013/04/clip_image0271_thumb.png)

Points to note:

If mama hasn't contacted you first, don't say a single word to her!
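The router loop above implements least-recently-used (LRU) dispatch: each REQ worker announces itself, and the router hands the next task to the worker that has waited longest. Here is a Python 3 sketch of just that bookkeeping, with hypothetical names and no sockets. A task is never pushed to a worker that has not asked for one.

```python
from collections import deque

ready_workers = deque()          # workers that have said "ready", oldest first
assignments = []


def worker_ready(identity):
    ready_workers.append(identity)


def dispatch(task):
    """Give the task to the worker that has been waiting longest (LRU).
    Returns False when no worker has asked for work yet."""
    if not ready_workers:
        return False             # never push to a REQ that hasn't asked
    assignments.append((ready_workers.popleft(), task))
    return True


for w in ["w1", "w2", "w3"]:
    worker_ready(w)
dispatch("t1")
dispatch("t2")
worker_ready("w1")               # w1 finished t1 and asks again
dispatch("t3")
dispatch("t4")
print(assignments)  # [('w1', 't1'), ('w2', 't2'), ('w3', 't3'), ('w1', 't4')]
print(dispatch("t5"))  # False: nobody is waiting
```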

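XREP-to-REP:

This combination is not part of the classic toolkit. The usual arrangement is XREP-XREQ-REP, with the dealer passing the data along. But since both types exist, why not try connecting them directly~

Example model: ![clip_image028[1] clip_image028[1]](http://www.wy182000.com/wordpress/wp-content/uploads/2013/04/clip_image0281_thumb.png)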

```python
import time

import zmq

import zhelpers

context = zmq.Context()
client = context.socket(zmq.XREP)
client.bind("ipc://routing.ipc")

worker = context.socket(zmq.REP)
worker.setsockopt(zmq.IDENTITY, "A")
worker.connect("ipc://routing.ipc")

# Wait for sockets to stabilize
time.sleep(1)

client.send("A", zmq.SNDMORE)
client.send("address 3", zmq.SNDMORE)
client.send("address 2", zmq.SNDMORE)
client.send("address 1", zmq.SNDMORE)
client.send("", zmq.SNDMORE)
client.send("This is the workload")

# Worker should get just the workload
zhelpers.dump(worker)

# We don't play with envelopes in the worker
worker.send("This is the reply")

# Now dump what we got off the XREP socket...
zhelpers.dump(client)
```

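Data structure: ![clip_image029[1]](http://www.wy182000.com/wordpress/wp-content/uploads/2013/04/clip_image0291_thumb.png)

Note:

Unlike REQ, a REP socket is passive. Before routing data to it, you must make sure it already exists and is connected. Otherwise the data is simply lost, because XREP silently drops messages addressed to an identity it cannot route to.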
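Besides delivery, the listing shows how REP treats the envelope. Everything up to the empty delimiter frame is kept aside, only the workload reaches the application, and the envelope is put back on the reply. Below is a pure-Python illustration with frames as lists of strings; `rep_receive` and `rep_reply` are hypothetical names, not pyzmq calls.

```python
def rep_receive(frames):
    """Split at the first empty delimiter frame.

    REP keeps the envelope (the frames before the delimiter) to itself and
    hands the application only the frames after it.
    """
    delimiter = frames.index("")
    return frames[:delimiter], frames[delimiter + 1:]


def rep_reply(envelope, reply):
    """Re-attach the saved envelope and delimiter to the application reply."""
    return envelope + ["", reply]


# What reaches the REP socket once XREP has used up the "A" routing frame.
incoming = ["address 3", "address 2", "address 1", "", "This is the workload"]
envelope, body = rep_receive(incoming)
print(body)                     # ['This is the workload']

# XREP prepends the sender's identity ("A") when the reply comes back.
back_at_xrep = ["A"] + rep_reply(envelope, "This is the reply")
print(back_at_xrep)
```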

First Steps with zeroMQ-18. Advanced Request-Reply (4): Custom Routing 3


From the classic pattern to beyond it.

First, a quick review of the classic setup: ![clip_image030[1]](http://www.wy182000.com/wordpress/wp-content/uploads/2013/04/clip_image0301_thumb.png)

Next, extend it: ![clip_image031[1]](http://www.wy182000.com/wordpress/wp-content/uploads/2013/04/clip_image0311_thumb.png)

Then, a variation: ![clip_image032[1]](http://www.wy182000.com/wordpress/wp-content/uploads/2013/04/clip_image0321_thumb.png)

```python
import threading
import time

import zmq

NBR_CLIENTS = 10
NBR_WORKERS = 3


def worker_thread(worker_url, context, i):
    """Worker using REQ socket to do LRU routing"""
    socket = context.socket(zmq.REQ)
    identity = "Worker-%d" % i
    socket.setsockopt(zmq.IDENTITY, identity)  # set worker identity
    socket.connect(worker_url)

    # Tell the broker we are ready for work
    socket.send("READY")

    try:
        while True:
            # REQ strips the leading empty frame, and the broker puts no
            # delimiter between the client address and the request
            address = socket.recv()
            request = socket.recv()

            print("%s: %s\n" % (identity, request))

            socket.send(address, zmq.SNDMORE)
            socket.send("", zmq.SNDMORE)
            socket.send("OK")

    except zmq.ZMQError as zerr:
        # context terminated so quit silently
        if zerr.errno == zmq.ETERM:
            socket.close()
            return
        raise


def client_thread(client_url, context, i):
    """Basic request-reply client using REQ socket"""
    socket = context.socket(zmq.REQ)
    identity = "Client-%d" % i
    socket.setsockopt(zmq.IDENTITY, identity)  # set client identity; makes tracing easier
    socket.connect(client_url)

    # Send request, get reply
    socket.send("HELLO")
    reply = socket.recv()
    print("%s: %s\n" % (identity, reply))
    socket.close()


def main():
    """main method"""
    url_worker = "inproc://workers"
    url_client = "inproc://clients"
    client_nbr = NBR_CLIENTS

    # Prepare our context and sockets
    context = zmq.Context(1)
    frontend = context.socket(zmq.XREP)
    frontend.bind(url_client)
    backend = context.socket(zmq.XREP)
    backend.bind(url_worker)

    # create workers and clients threads
    for i in range(NBR_WORKERS):
        thread = threading.Thread(target=worker_thread, args=(url_worker, context, i))
        thread.start()

    for i in range(NBR_CLIENTS):
        thread_c = threading.Thread(target=client_thread, args=(url_client, context, i))
        thread_c.start()

    # Logic of LRU loop
    # - Poll backend always, frontend only if 1+ worker ready
    # - If worker replies, queue worker as ready and forward reply
    #   to client if necessary
    # - If client requests, pop next worker and send request to it

    # Queue of available workers
    available_workers = 0
    workers_list = []

    # init poller
    poller = zmq.Poller()

    # Always poll for worker activity on backend
    poller.register(backend, zmq.POLLIN)

    # Frontend is registered too, but only read when a worker is available
    poller.register(frontend, zmq.POLLIN)

    while True:
        socks = dict(poller.poll())

        # Handle worker activity on backend
        if backend in socks and socks[backend] == zmq.POLLIN:
            # Queue worker address for LRU routing
            worker_addr = backend.recv()

            assert available_workers < NBR_WORKERS

            # add worker back to the list of workers
            available_workers += 1
            workers_list.append(worker_addr)

            # Second frame is empty
            empty = backend.recv()
            assert empty == ""

            # Third frame is READY or else a client reply address
            client_addr = backend.recv()

            # If client reply, send rest back to frontend
            if client_addr != "READY":
                # Following frame is empty
                empty = backend.recv()
                assert empty == ""

                reply = backend.recv()

                frontend.send(client_addr, zmq.SNDMORE)
                frontend.send("", zmq.SNDMORE)
                frontend.send(reply)

                client_nbr -= 1

                if client_nbr == 0:
                    break  # Exit after N messages

        # poll on frontend only if workers are available
        if available_workers > 0:
            if frontend in socks and socks[frontend] == zmq.POLLIN:
                # Now get next client request, route to LRU worker
                # Client request is [address][empty][request]
                client_addr = frontend.recv()

                empty = frontend.recv()
                assert empty == ""

                request = frontend.recv()

                # Dequeue the least recently used worker (oldest entry)
                available_workers -= 1
                worker_id = workers_list.pop(0)

                backend.send(worker_id, zmq.SNDMORE)
                backend.send("", zmq.SNDMORE)
                backend.send(client_addr, zmq.SNDMORE)
                backend.send(request)

    # out of infinite loop: do some housekeeping
    time.sleep(1)

    frontend.close()
    backend.close()
    context.term()


if __name__ == "__main__":
    main()
```

What the client sends: ![clip_image033[1]](http://www.wy182000.com/wordpress/wp-content/uploads/2013/04/clip_image0331_thumb.png)

After the router processes it: ![clip_image034[1]](http://www.wy182000.com/wordpress/wp-content/uploads/2013/04/clip_image0341_thumb.png)

What is then forwarded to the worker: ![clip_image035[1]](http://www.wy182000.com/wordpress/wp-content/uploads/2013/04/clip_image0351_thumb.png)

What the worker actually handles: ![clip_image036[1]](http://www.wy182000.com/wordpress/wp-content/uploads/2013/04/clip_image0361_thumb.png)

The path from worker back to client is the same process in reverse. Since both ends use REQ sockets, the envelope handling is symmetric.
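The diagrams are images, so here is the same round trip as a pure-Python walk-through. Each socket type is modelled as a small function. This illustrates the envelope rules and is not pyzmq code. It uses the identities Client-0 and Worker-1 that the listing's `setsockopt(zmq.IDENTITY, ...)` calls would produce.

```python
def router_recv(identity, frames):
    # XREP (ROUTER) prepends the sender's identity to everything it receives.
    return [identity] + frames


def router_send(frames):
    # XREP (ROUTER) pops the first frame and uses it as the destination.
    return frames[0], frames[1:]


def req_send(frames):
    # REQ puts an empty delimiter frame in front of each outgoing message.
    return [""] + frames


def req_recv(frames):
    # REQ removes the leading empty delimiter before the application sees it.
    assert frames[0] == ""
    return frames[1:]


# 1. Client-0 sends HELLO; the frontend reads [client_addr, "", request].
at_frontend = router_recv("Client-0", req_send(["HELLO"]))
client_addr, _, request = at_frontend

# 2. The broker sends [worker_id, "", client_addr, request] to the backend.
dest, on_wire = router_send(["Worker-1", "", client_addr, request])

# 3. Worker-1's REQ socket hands the application [client_addr, request].
at_worker = req_recv(on_wire)

# 4. The worker replies [client_addr, "", "OK"]; the backend reads 5 frames.
at_backend = router_recv("Worker-1", req_send([at_worker[0], "", "OK"]))

# 5. The broker sends [client_addr, "", reply] out of the frontend, and the
#    client's REQ socket returns just the reply.
dest2, to_client = router_send([at_backend[2], "", at_backend[4]])
print(at_worker)              # ['Client-0', 'HELLO']
print(req_recv(to_client))    # ['OK']
```

Step 3 also explains why the worker reads exactly two frames: REQ has already stripped the delimiter, and the broker inserts none between the client address and the request.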

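[Addendum]:

A higher-level API usually takes care of some of this work and spares us from assembling each message frame by frame. In Python, for example, with recv_multipart() and send_multipart() the final code looks like this: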

```python
import threading
import time

import zmq

NBR_CLIENTS = 10
NBR_WORKERS = 3


def worker_thread(worker_url, context, i):
    """Worker using REQ socket to do LRU routing"""
    socket = context.socket(zmq.REQ)
    identity = "Worker-%d" % i
    socket.setsockopt(zmq.IDENTITY, identity)  # set worker identity
    socket.connect(worker_url)

    # Tell the broker we are ready for work
    socket.send("READY")

    try:
        while True:
            address, request = socket.recv_multipart()

            print("%s: %s\n" % (identity, request))

            socket.send_multipart([address, "", "OK"])

    except zmq.ZMQError as zerr:
        # context terminated so quit silently
        if zerr.errno == zmq.ETERM:
            socket.close()
            return
        raise


def client_thread(client_url, context, i):
    """Basic request-reply client using REQ socket"""
    socket = context.socket(zmq.REQ)
    identity = "Client-%d" % i
    socket.setsockopt(zmq.IDENTITY, identity)  # set client identity; makes tracing easier
    socket.connect(client_url)

    # Send request, get reply
    socket.send("HELLO")
    reply = socket.recv()
    print("%s: %s\n" % (identity, reply))
    socket.close()


def main():
    """main method"""
    url_worker = "inproc://workers"
    url_client = "inproc://clients"
    client_nbr = NBR_CLIENTS

    # Prepare our context and sockets
    context = zmq.Context(1)
    frontend = context.socket(zmq.XREP)
    frontend.bind(url_client)
    backend = context.socket(zmq.XREP)
    backend.bind(url_worker)

    # create workers and clients threads
    for i in range(NBR_WORKERS):
        thread = threading.Thread(target=worker_thread, args=(url_worker, context, i))
        thread.start()

    for i in range(NBR_CLIENTS):
        thread_c = threading.Thread(target=client_thread, args=(url_client, context, i))
        thread_c.start()

    # Logic of LRU loop
    # - Poll backend always, frontend only if 1+ worker ready
    # - If worker replies, queue worker as ready and forward reply
    #   to client if necessary
    # - If client requests, pop next worker and send request to it

    # Queue of available workers
    available_workers = 0
    workers_list = []

    # init poller
    poller = zmq.Poller()

    # Always poll for worker activity on backend
    poller.register(backend, zmq.POLLIN)

    # Frontend is registered too, but only read when a worker is available
    poller.register(frontend, zmq.POLLIN)

    while True:
        socks = dict(poller.poll())

        # Handle worker activity on backend
        if backend in socks and socks[backend] == zmq.POLLIN:
            # Queue worker address for LRU routing
            message = backend.recv_multipart()

            assert available_workers < NBR_WORKERS

            worker_addr = message[0]

            # add worker back to the list of workers
            available_workers += 1
            workers_list.append(worker_addr)

            # Second frame is empty
            empty = message[1]
            assert empty == ""

            # Third frame is READY or else a client reply address
            client_addr = message[2]

            # If client reply, send rest back to frontend
            if client_addr != "READY":
                # Following frame is empty
                empty = message[3]
                assert empty == ""

                reply = message[4]

                frontend.send_multipart([client_addr, "", reply])

                client_nbr -= 1

                if client_nbr == 0:
                    break  # Exit after N messages

        # poll on frontend only if workers are available
        if available_workers > 0:
            if frontend in socks and socks[frontend] == zmq.POLLIN:
                # Now get next client request, route to LRU worker
                # Client request is [address][empty][request]
                client_addr, empty, request = frontend.recv_multipart()

                assert empty == ""

                # Dequeue the least recently used worker (oldest entry)
                available_workers -= 1
                worker_id = workers_list.pop(0)

                backend.send_multipart([worker_id, "", client_addr, request])

    # out of infinite loop: do some housekeeping
    time.sleep(1)

    frontend.close()
    backend.close()
    context.term()


if __name__ == "__main__":
    main()
```
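A note on the queue of idle workers: for true least-recently-used routing it has to be first-in, first-out. Python's `list.pop()` with no argument returns the newest entry. `collections.deque.popleft()` returns the oldest, in constant time. Here is a minimal sketch; `LRUWorkerQueue` is a hypothetical helper.

```python
from collections import deque


class LRUWorkerQueue(object):
    """Hypothetical helper: idle workers, oldest first."""

    def __init__(self):
        self._idle = deque()

    def ready(self, worker_id):
        # Called when a worker sends READY or returns a reply.
        self._idle.append(worker_id)

    def next_worker(self):
        # popleft() hands out the worker that has been idle the longest.
        return self._idle.popleft()

    def __len__(self):
        return len(self._idle)


idle_workers = LRUWorkerQueue()
for wid in ["Worker-0", "Worker-1", "Worker-2"]:
    idle_workers.ready(wid)
first = idle_workers.next_worker()
idle_workers.ready(first)     # Worker-0 finished its task and is idle again
order = [idle_workers.next_worker() for _ in range(len(idle_workers))]
print(first)                  # Worker-0
print(order)                  # ['Worker-1', 'Worker-2', 'Worker-0']
```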

First Steps with zeroMQ-19. Advanced Request-Reply (5): Asynchronous Request-Reply


```python
import threading
import time
from random import choice

import zmq


class ClientTask(threading.Thread):
    """ClientTask"""
    def __init__(self):
        threading.Thread.__init__(self)

    def run(self):
        context = zmq.Context()
        socket = context.socket(zmq.XREQ)
        identity = 'worker-%d' % (choice([0, 1, 2, 3, 4, 5, 6, 7, 8, 9]))
        socket.setsockopt(zmq.IDENTITY, identity)
        socket.connect('tcp://localhost:5570')
        print 'Client %s started' % (identity)
        poll = zmq.Poller()
        poll.register(socket, zmq.POLLIN)
        reqs = 0
        while True:
            for i in xrange(5):
                sockets = dict(poll.poll(1000))
                if socket in sockets:
                    if sockets[socket] == zmq.POLLIN:
                        msg = socket.recv()
                        print '%s: %s\n' % (identity, msg)
                        del msg
            reqs = reqs + 1
            print 'Req #%d sent..' % (reqs)
            socket.send('request #%d' % (reqs))

        socket.close()
        context.term()


class ServerTask(threading.Thread):
    """ServerTask"""
    def __init__(self):
        threading.Thread.__init__(self)

    def run(self):
        context = zmq.Context()
        frontend = context.socket(zmq.XREP)
        frontend.bind('tcp://*:5570')

        backend = context.socket(zmq.XREQ)
        backend.bind('inproc://backend')

        workers = []
        for i in xrange(5):
            worker = ServerWorker(context)
            worker.start()
            workers.append(worker)

        poll = zmq.Poller()
        poll.register(frontend, zmq.POLLIN)
        poll.register(backend, zmq.POLLIN)

        while True:
            sockets = dict(poll.poll())
            if frontend in sockets:
                if sockets[frontend] == zmq.POLLIN:
                    # [client identity, request]: keep both frames together
                    msg = frontend.recv_multipart()
                    print 'Server received %s' % (msg)
                    backend.send_multipart(msg)
            if backend in sockets:
                if sockets[backend] == zmq.POLLIN:
                    msg = backend.recv_multipart()
                    frontend.send_multipart(msg)

        frontend.close()
        backend.close()
        context.term()


class ServerWorker(threading.Thread):
    """ServerWorker"""
    def __init__(self, context):
        threading.Thread.__init__(self)
        self.context = context

    def run(self):
        worker = self.context.socket(zmq.XREQ)
        worker.connect('inproc://backend')
        print 'Worker started'
        while True:
            ident, msg = worker.recv_multipart()
            print 'Worker received %s from %s' % (msg, ident)
            replies = choice(xrange(5))
            for i in xrange(replies):
                # float division, so the delay is a fraction of a second
                time.sleep(1.0 / choice(range(1, 10)))
                worker.send_multipart([ident, msg])
            del msg

        worker.close()


def main():
    """main function"""
    server = ServerTask()
    server.start()
    for i in xrange(3):
        client = ClientTask()
        client.start()

    server.join()


if __name__ == "__main__":
    main()
```

As an asynchronous server, its detailed diagram would look like this:

Data is passed in this order:

```
     client         server        frontend       worker
   [ XREQ ]<---->[ XREP <----> XREQ <----> XREQ ]
           1 part         2 parts       2 parts
```

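Here we may run into a classic client/server problem:

What if there are so many clients that they exhaust the server's resources?

That calls for a more robust mechanism, for example using "heartbeats" to decide whether a client's resources should be released. That, of course, is another topic.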
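Here is a minimal sketch of that idea. It assumes the server tracks each client identity and counts every incoming message (request or heartbeat) as a sign of life. `HEARTBEAT_INTERVAL`, `HEARTBEAT_LIVENESS` and `ClientTracker` are hypothetical names, not ZeroMQ API. A fake clock is injected so the example is deterministic.

```python
import time

HEARTBEAT_INTERVAL = 1.0   # seconds between expected heartbeats (assumed value)
HEARTBEAT_LIVENESS = 3     # missed heartbeats tolerated before expiry (assumed)


class ClientTracker(object):
    """Hypothetical server-side table of when each client was last heard."""

    def __init__(self, clock=time.time):
        self._clock = clock
        self._last_seen = {}

    def heard_from(self, identity):
        # Call this for every request or heartbeat from a client.
        self._last_seen[identity] = self._clock()

    def purge_expired(self):
        # Forget clients silent for longer than INTERVAL * LIVENESS seconds
        # and return their identities so their resources can be released.
        deadline = self._clock() - HEARTBEAT_INTERVAL * HEARTBEAT_LIVENESS
        expired = sorted(cid for cid, seen in self._last_seen.items()
                         if seen < deadline)
        for cid in expired:
            del self._last_seen[cid]
        return expired


now = [0.0]                              # fake clock for a repeatable demo
tracker = ClientTracker(clock=lambda: now[0])
tracker.heard_from("client-3")
tracker.heard_from("client-7")
now[0] = 2.0
tracker.heard_from("client-7")           # client-7 is still alive
now[0] = 4.0
expired = tracker.purge_expired()
print(expired)                           # ['client-3']
```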

First Steps with zeroMQ-20. Advanced Request-Reply (6): Many-to-Many Routing


```python
import time

import zmq

import zhelpers

context = zmq.Context()

worker = context.socket(zmq.XREP)
worker.setsockopt(zmq.IDENTITY, "WORKER")
worker.bind("ipc://rtrouter.ipc")

server = context.socket(zmq.XREP)
server.setsockopt(zmq.IDENTITY, "SERVER")
server.connect("ipc://rtrouter.ipc")

# Give the connection time to come up before routing by identity
time.sleep(1)

server.send_multipart(["WORKER", "", "send to worker"])
zhelpers.dump(worker)

worker.send_multipart(["SERVER", "", "send to server"])
zhelpers.dump(server)
```

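Note:

Although this looks nice, it carries a major hidden risk: confusion. Routers at the same level must be given unique names (identities), to reduce the chance of messages ending up in the wrong place.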

First Steps with zeroMQ-21. Advanced Request-Reply (7): Cloud Computing


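Here is a "cloud computing" example, a very hot topic lately.

Definition:

Lots of workers run on all kinds of hardware, and any single worker can solve a problem on its own. Each cluster has hundreds or even thousands of such devices, and a dozen such clusters are connected and interact with one another. The whole collection is called the "cloud", and the service it provides is called "cloud computing".

Suppose any device or cluster in the "cloud" can join and leave freely, and any crashed worker can be detected and restarted. Then it can fairly be called dependable cloud computing.

First, a single standalone cluster:

Look familiar? It has in fact already been introduced.

Then, extend it to multiple clusters:

This picture has an obvious problem: how do clients and workers in different clusters reach each other?

There are two solutions: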

1.

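This looks fine, but it suffers from "the busy stay busy". When a worker says "I'm ready", both brokers learn about it, and both may assign it a task at the same moment. That is not what we want.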

2.

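This one looks simpler: only the brokers talk to each other, trading resources to get the work done. It is actually a somewhat more complex algorithm than the plain "broker" one, since each broker in turn acts as a "subcontractor" for the others.

Now let's go with the second option. There are several ways to connect two brokers. First comes the simplest one, and the one we have been using all along: request-reply, treating the brokers as a client/server-style request-reply pair:

That seems to raise a new problem (being too simple is a problem in itself). Classic request-reply handles only one request at a time, because a REQ socket allows just one outstanding request, and then... nothing more. So it is more dependable to connect the brokers asynchronously.

In addition, the original text describes a DNS-like scheme: brokers exchange their resource status via publish/subscribe and pass tasks to one another via asynchronous request-reply.

Before the coming example, a good naming convention is essential.

Three pairs of sockets will appear here: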

1. Request-reply inside a cluster: localfe, localbe

2. Request-reply between clusters: cloudfe, cloudbe

3. Resource state between clusters: statefe, statebe
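To make the naming concrete, here is a small hypothetical helper that derives every endpoint from a broker's name. It uses the ipc endpoint patterns that appear in this post's peering listings (e.g. `"ipc://%s-cloud.ipc"`). The broker names DC1 to DC3 are made up.

```python
# role -> (socket type in the listings, bind or connect, endpoint suffix)
SOCKET_ROLES = {
    "localfe": ("XREP", "bind", "localfe"),   # local clients connect here
    "localbe": ("XREP", "bind", "localbe"),   # local workers connect here
    "cloudfe": ("XREP", "bind", "cloud"),     # peer brokers send tasks here
    "cloudbe": ("XREP", "connect", "cloud"),  # we send tasks to peers
    "statebe": ("PUB", "bind", "state"),      # we publish our capacity
    "statefe": ("SUB", "connect", "state"),   # we hear peers' capacity
}


def socket_plan(me, peers):
    """Map each role to (type, action, endpoints) for broker `me`."""
    plan = {}
    for role, (sock_type, action, suffix) in SOCKET_ROLES.items():
        # Bound sockets use our own name; connected ones target every peer.
        names = [me] if action == "bind" else list(peers)
        plan[role] = (sock_type, action,
                      ["ipc://%s-%s.ipc" % (name, suffix) for name in names])
    return plan


plan = socket_plan("DC1", ["DC2", "DC3"])
print(plan["cloudbe"])   # ('XREP', 'connect', ['ipc://DC2-cloud.ipc', 'ipc://DC3-cloud.ipc'])
```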

In the end, the broker will look like this:

Below, we split the broker's sockets into separate prototypes.

1. Resource state:

```python
import random
import time

import zmq


def main(args):

    myself = args[1]
    print "Hello, I am", myself

    context = zmq.Context()

    # State Back-End
    statebe = context.socket(zmq.PUB)

    # State Front-End
    statefe = context.socket(zmq.SUB)
    statefe.setsockopt(zmq.SUBSCRIBE, '')

    bind_address = "ipc://" + myself + "-state.ipc"
    statebe.bind(bind_address)

    for i in range(len(args) - 2):
        endpoint = "ipc://" + args[i + 2] + "-state.ipc"
        statefe.connect(endpoint)
    time.sleep(1.0)

    poller = zmq.Poller()
    poller.register(statefe, zmq.POLLIN)

    while True:

        ########## Solution with poll() ##########
        socks = dict(poller.poll(1000))

        try:
            # Handle incoming status message
            if socks[statefe] == zmq.POLLIN:
                msg = statefe.recv_multipart()
                print 'Received:', msg

        except KeyError:
            # Send our address and a random value
            # for worker availability
            msg = []
            msg.append(bind_address)
            msg.append(str(random.randrange(1, 10)))
            statebe.send_multipart(msg)
        ##################################

        ######### Solution with select() #########
        # (pollin, pollout, pollerr) = zmq.select([statefe], [], [], 1)
        #
        # if len(pollin) > 0 and pollin[0] == statefe:
        #     # Handle incoming status message
        #     msg = statefe.recv_multipart()
        #     print 'Received:', msg
        #
        # else:
        #     # Send our address and a random value
        #     # for worker availability
        #     msg = []
        #     msg.append(bind_address)
        #     msg.append(str(random.randrange(1, 10)))
        #     statebe.send_multipart(msg)
        ##################################

    poller.unregister(statefe)
    time.sleep(1.0)


if __name__ == '__main__':
    import sys

    if len(sys.argv) < 2:
        print "Usage: peering.py <myself> <peer_1> ... <peer_N>"
        raise SystemExit

    main(sys.argv)
```

2. Local and cloud task flow:

```lua
require"zmq"
require"zmq.poller"
require"zmq.threads"
require"zmsg"

local tremove = table.remove

local NBR_CLIENTS = 10
local NBR_WORKERS = 3

local pre_code = [[
    local self, seed = ...
    local zmq = require"zmq"
    local zmsg = require"zmsg"
    require"zhelpers"
    math.randomseed(seed)
    local context = zmq.init(1)

]]

-- Request-reply client using REQ socket
--
local client_task = pre_code .. [[
    local client = context:socket(zmq.REQ)
    local endpoint = string.format("ipc://%s-localfe.ipc", self)
    assert(client:connect(endpoint))

    while true do
        -- Send request, get reply
        local msg = zmsg.new ("HELLO")
        msg:send(client)
        msg = zmsg.recv (client)
        printf ("I: client status: %s\n", msg:body())
    end
    -- We never get here but if we did, this is how we'd exit cleanly
    client:close()
    context:term()
]]

-- Worker using REQ socket to do LRU routing
--
local worker_task = pre_code .. [[
    local worker = context:socket(zmq.REQ)
    local endpoint = string.format("ipc://%s-localbe.ipc", self)
    assert(worker:connect(endpoint))

    -- Tell broker we're ready for work
    local msg = zmsg.new ("READY")
    msg:send(worker)

    while true do
        msg = zmsg.recv (worker)
        -- Do some 'work'
        s_sleep (1000)
        msg:body_fmt("OK - %04x", randof (0x10000))
        msg:send(worker)
    end
    -- We never get here but if we did, this is how we'd exit cleanly
    worker:close()
    context:term()
]]

-- First argument is this broker's name
-- Other arguments are our peers' names
--
s_version_assert (2, 1)
if (#arg < 1) then
    printf ("syntax: peering2 me doyouend...\n")
    os.exit(-1)
end
-- Our own name; in practice this'd be configured per node
local self = arg[1]
printf ("I: preparing broker at %s...\n", self)
math.randomseed(os.time())

-- Prepare our context and sockets
local context = zmq.init(1)

-- Bind cloud frontend to endpoint
local cloudfe = context:socket(zmq.XREP)
local endpoint = string.format("ipc://%s-cloud.ipc", self)
cloudfe:setopt(zmq.IDENTITY, self)
assert(cloudfe:bind(endpoint))

-- Connect cloud backend to all peers
local cloudbe = context:socket(zmq.XREP)
cloudbe:setopt(zmq.IDENTITY, self)

local peers = {}
for n=2,#arg do
    local peer = arg[n]
    -- add peer name to peers list.
    peers[#peers + 1] = peer
    peers[peer] = true -- map peer's name to 'true' for fast lookup
    printf ("I: connecting to cloud frontend at '%s'\n", peer)
    local endpoint = string.format("ipc://%s-cloud.ipc", peer)
    assert(cloudbe:connect(endpoint))
end
-- Prepare local frontend and backend
local localfe = context:socket(zmq.XREP)
local endpoint = string.format("ipc://%s-localfe.ipc", self)
assert(localfe:bind(endpoint))

local localbe = context:socket(zmq.XREP)
local endpoint = string.format("ipc://%s-localbe.ipc", self)
assert(localbe:bind(endpoint))

-- Get user to tell us when we can start...
printf ("Press Enter when all brokers are started: ")
io.read('*l')

-- Start local workers
local workers = {}
for n=1,NBR_WORKERS do
    local seed = os.time() + math.random()
    workers[n] = zmq.threads.runstring(nil, worker_task, self, seed)
    workers[n]:start(true)
end
-- Start local clients
local clients = {}
for n=1,NBR_CLIENTS do
    local seed = os.time() + math.random()
    clients[n] = zmq.threads.runstring(nil, client_task, self, seed)
    clients[n]:start(true)
end

-- Interesting part
-- -------------------------------------------------------------
-- Request-reply flow
--  - Poll backends and process local/cloud replies
--  - While worker available, route localfe to local or cloud

-- Queue of available workers
local worker_queue = {}
local backends = zmq.poller(2)

local function send_reply(msg)
    local address = msg:address()
    -- Route reply to cloud if it's addressed to a broker
    if peers[address] then
        msg:send(cloudfe) -- reply is for a peer.
    else
        msg:send(localfe) -- reply is for a local client.
    end
end

backends:add(localbe, zmq.POLLIN, function()
    local msg = zmsg.recv(localbe)

    -- Use worker address for LRU routing
    worker_queue[#worker_queue + 1] = msg:unwrap()
    -- if reply is not "READY" then route reply back to client.
    if (msg:address() ~= "READY") then
        send_reply(msg)
    end
end)

backends:add(cloudbe, zmq.POLLIN, function()
    local msg = zmsg.recv(cloudbe)
    -- We don't use peer broker address for anything
    msg:unwrap()
    -- send reply back to client.
    send_reply(msg)
end)

local frontends = zmq.poller(2)
local localfe_ready = false
local cloudfe_ready = false

frontends:add(localfe, zmq.POLLIN, function() localfe_ready = true end)
frontends:add(cloudfe, zmq.POLLIN, function() cloudfe_ready = true end)

while true do
    local timeout = (#worker_queue > 0) and 1000000 or -1
    -- If we have no workers anyhow, wait indefinitely
    rc = backends:poll(timeout)
    assert (rc >= 0)

    -- Now route as many clients requests as we can handle
    --
    while (#worker_queue > 0) do
        rc = frontends:poll(0)
        assert (rc >= 0)
        local reroutable = false
        local msg
        -- We'll do peer brokers first, to prevent starvation
        if (cloudfe_ready) then
            cloudfe_ready = false -- reset flag
            msg = zmsg.recv (cloudfe)
            reroutable = false
        elseif (localfe_ready) then
            localfe_ready = false -- reset flag
            msg = zmsg.recv (localfe)
            reroutable = true
        else
            break; -- No work, go back to backends
        end

        -- If reroutable, send to cloud 20% of the time
        -- Here we'd normally use cloud status information
        --
        local percent = randof (5)
        if (reroutable and #peers > 0 and percent == 0) then
            -- Route to random broker peer
            local random_peer = randof (#peers) + 1
            msg:wrap(peers[random_peer], nil)
            msg:send(cloudbe)
        else
            -- Dequeue and drop the next worker address
            local worker = tremove(worker_queue, 1)
            msg:wrap(worker, "")
            msg:send(localbe)
        end
    end
end
-- We never get here but clean up anyhow
localbe:close()
cloudbe:close()
localfe:close()
cloudfe:close()
context:term()
```

Note:

This is Lua code; the official guide did not provide a Python version. I'll fill one in another day~
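Until a Python port exists, here is a pure-Python sketch of just the routing decision in the Lua loop above, with sockets left out. It is not a port of the whole broker. `route()` and `FixedRng` are hypothetical names. The 20% figure mirrors `randof(5) == 0` in the Lua code.

```python
import random


def route(cloudfe_ready, localfe_ready, worker_queue, peers, rng=random):
    """Return ("cloud", peer), ("local", worker) or None (no pending work).

    Assumes worker_queue is non-empty, as in the Lua loop
    `while (#worker_queue > 0)`.
    """
    if cloudfe_ready:
        reroutable = False        # peer requests first; never bounce them on
    elif localfe_ready:
        reroutable = True
    else:
        return None               # nothing to route: go back to the backends
    # About 20% of local requests go to a random peer instead
    if reroutable and peers and rng.randrange(5) == 0:
        return ("cloud", rng.choice(peers))
    return ("local", worker_queue.pop(0))   # oldest idle worker first


class FixedRng(object):
    """Deterministic stand-in for the random module, for the demo only."""
    def __init__(self, value):
        self.value = value

    def randrange(self, n):
        return self.value % n

    def choice(self, seq):
        return seq[0]


workers = ["Worker-1", "Worker-2"]
print(route(True, True, workers, ["DC2"], FixedRng(0)))    # ('local', 'Worker-1')
print(route(False, True, workers, ["DC2"], FixedRng(0)))   # ('cloud', 'DC2')
print(route(False, False, workers, ["DC2"], FixedRng(0)))  # None
```

Serving peer requests first and never rerouting them keeps a request from bouncing between brokers indefinitely.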

3. Putting it all together:

```lua
require"zmq"
require"zmq.poller"
require"zmq.threads"
require"zmsg"

local tremove = table.remove

local NBR_CLIENTS = 10
local NBR_WORKERS = 5

local pre_code = [[
    local self, seed = ...
    local zmq = require"zmq"
    local zmsg = require"zmsg"
    require"zhelpers"
    math.randomseed(seed)
    local context = zmq.init(1)

]]

-- Request-reply client using REQ socket
-- To simulate load, clients issue a burst of requests and then
-- sleep for a random period.
--
local client_task = pre_code .. [[
    require"zmq.poller"

    local client = context:socket(zmq.REQ)
    local endpoint = string.format("ipc://%s-localfe.ipc", self)
    assert(client:connect(endpoint))

    local monitor = context:socket(zmq.PUSH)
    local endpoint = string.format("ipc://%s-monitor.ipc", self)
    assert(monitor:connect(endpoint))

    local poller = zmq.poller(1)
    local task_id = nil

    poller:add(client, zmq.POLLIN, function()
        local msg = zmsg.recv (client)
        -- Worker is supposed to answer us with our task id
        assert (msg:body() == task_id)
        -- mark task as processed.
        task_id = nil
    end)
    local is_running = true
    while is_running do
        s_sleep (randof (5) * 1000)

        local burst = randof (15)
        while (burst > 0) do
            burst = burst - 1
            -- Send request with random hex ID
            task_id = string.format("%04X", randof (0x10000))
            local msg = zmsg.new(task_id)
            msg:send(client)

            -- Wait max ten seconds for a reply, then complain
            rc = poller:poll(10 * 1000000)
            assert (rc >= 0)

            if task_id then
                local msg = zmsg.new()
                msg:body_fmt(
                    "E: CLIENT EXIT - lost task %s", task_id)
                msg:send(monitor)
                -- exit event loop
                is_running = false
                break
            end
        end
    end
    -- We never get here but if we did, this is how we'd exit cleanly
    client:close()
    monitor:close()
    context:term()
]]

-- Worker using REQ socket to do LRU routing
--
local worker_task = pre_code .. [[
    local worker = context:socket(zmq.REQ)
    local endpoint = string.format("ipc://%s-localbe.ipc", self)
    assert(worker:connect(endpoint))

    -- Tell broker we're ready for work
    local msg = zmsg.new ("READY")
    msg:send(worker)

    while true do
        -- Workers are busy for 0/1/2 seconds
        msg = zmsg.recv (worker)
        s_sleep (randof (2) * 1000)
        msg:send(worker)
    end
    -- We never get here but if we did, this is how we'd exit cleanly
    worker:close()
    context:term()
]]

-- First argument is this broker's name
-- Other arguments are our peers' names
--
s_version_assert (2, 1)
if (#arg < 1) then
    printf ("syntax: peering3 me doyouend...\n")
    os.exit(-1)
end
-- Our own name; in practice this'd be configured per node
local self = arg[1]
printf ("I: preparing broker at %s...\n", self)
math.randomseed(os.time())

-- Prepare our context and sockets
local context = zmq.init(1)

-- Bind cloud frontend to endpoint
local cloudfe = context:socket(zmq.XREP)
local endpoint = string.format("ipc://%s-cloud.ipc", self)
cloudfe:setopt(zmq.IDENTITY, self)
assert(cloudfe:bind(endpoint))

-- Bind state backend / publisher to endpoint
local statebe = context:socket(zmq.PUB)
local endpoint = string.format("ipc://%s-state.ipc", self)
assert(statebe:bind(endpoint))

-- Connect cloud backend to all peers
local cloudbe = context:socket(zmq.XREP)
cloudbe:setopt(zmq.IDENTITY, self)

for n=2,#arg do
    local peer = arg[n]
    printf ("I: connecting to cloud frontend at '%s'\n", peer)
    local endpoint = string.format("ipc://%s-cloud.ipc", peer)
    assert(cloudbe:connect(endpoint))
end
-- Connect statefe to all peers
local statefe = context:socket(zmq.SUB)
statefe:setopt(zmq.SUBSCRIBE, "", 0)

local peers = {}
for n=2,#arg do
    local peer = arg[n]
    -- add peer name to peers list.
    peers[#peers + 1] = peer
    peers[peer] = 0 -- set peer's initial capacity to zero.
    printf ("I: connecting to state backend at '%s'\n", peer)
    local endpoint = string.format("ipc://%s-state.ipc", peer)
    assert(statefe:connect(endpoint))
end
-- Prepare local frontend and backend
local localfe = context:socket(zmq.XREP)
local endpoint = string.format("ipc://%s-localfe.ipc", self)
assert(localfe:bind(endpoint))

local localbe = context:socket(zmq.XREP)
local endpoint = string.format("ipc://%s-localbe.ipc", self)
assert(localbe:bind(endpoint))

-- Prepare monitor socket
local monitor = context:socket(zmq.PULL)
local endpoint = string.format("ipc://%s-monitor.ipc", self)
assert(monitor:bind(endpoint))

-- Start local workers
local workers = {}
for n=1,NBR_WORKERS do
    local seed = os.time() + math.random()
    workers[n] = zmq.threads.runstring(nil, worker_task, self, seed)
    workers[n]:start(true)
end
-- Start local clients
local clients = {}
for n=1,NBR_CLIENTS do
    local seed = os.time() + math.random()
    clients[n] = zmq.threads.runstring(nil, client_task, self, seed)
    clients[n]:start(true)
end

-- Interesting part
-- -------------------------------------------------------------
-- Publish-subscribe flow
--  - Poll statefe and process capacity updates
--  - Each time capacity changes, broadcast new value
-- Request-reply flow
--  - Poll primary and process local/cloud replies
--  - While worker available, route localfe to local or cloud

-- Queue of available workers
local local_capacity = 0
local cloud_capacity = 0
local worker_queue = {}
local backends = zmq.poller(2)

local function send_reply(msg)
    local address = msg:address()
    -- Route reply to cloud if it's addressed to a broker
    if peers[address] then
        msg:send(cloudfe) -- reply is for a peer.
    else
        msg:send(localfe) -- reply is for a local client.
    end
end

backends:add(localbe, zmq.POLLIN, function()
    local msg = zmsg.recv(localbe)

    -- Use worker address for LRU routing
    local_capacity = local_capacity + 1
    worker_queue[local_capacity] = msg:unwrap()
    -- if reply is not "READY" then route reply back to client.
    if (msg:address() ~= "READY") then
        send_reply(msg)
    end
end)

backends:add(cloudbe, zmq.POLLIN, function()
    local msg = zmsg.recv(cloudbe)

    -- We don't use peer broker address for anything
    msg:unwrap()
    -- send reply back to client.
    send_reply(msg)
end)

backends:add(statefe, zmq.POLLIN, function()
    local msg = zmsg.recv (statefe)
    -- TODO: track capacity for each peer
    cloud_capacity = tonumber(msg:body())
end)

backends:add(monitor, zmq.POLLIN, function()
    local msg = zmsg.recv (monitor)
    printf("%s\n", msg:body())
end)

local frontends = zmq.poller(2)
local localfe_ready = false
local cloudfe_ready = false

frontends:add(localfe, zmq.POLLIN, function() localfe_ready = true end)
frontends:add(cloudfe, zmq.POLLIN, function() cloudfe_ready = true end)

local MAX_BACKEND_REPLIES = 20

while true do
    -- If we have no workers anyhow, wait indefinitely
    local timeout = (local_capacity > 0) and 1000000 or -1
    local rc, err = backends:poll(timeout)
    assert (rc >= 0, err)

    -- Track if capacity changes during this iteration
    local previous = local_capacity

    -- Now route as many clients requests as we can handle
    --  - If we have local capacity we poll both localfe and cloudfe
    --  - If we have cloud capacity only, we poll just localfe
    --  - Route any request locally if we can, else to cloud
    --
    while ((local_capacity + cloud_capacity) > 0) do
        local rc, err = frontends:poll(0)
        assert (rc >= 0, err)

        local msg -- keep the request local to this iteration
        if (localfe_ready) then
            localfe_ready = false
            msg = zmsg.recv (localfe)
        elseif (cloudfe_ready and local_capacity > 0) then
            cloudfe_ready = false
            -- we have local capacity poll cloud frontend for work.
            msg = zmsg.recv (cloudfe)
        else
            break; -- No work, go back to primary
        end

        if (local_capacity > 0) then
            -- Dequeue and drop the next worker address
            local worker = tremove(worker_queue, 1)
            local_capacity = local_capacity - 1
            msg:wrap(worker, "")
            msg:send(localbe)
        else
            -- Route to random broker peer
            printf ("I: route request %s to cloud...\n",
                msg:body())
            local random_peer = randof (#peers) + 1
            msg:wrap(peers[random_peer], nil)
            msg:send(cloudbe)
        end
    end
    if (local_capacity ~= previous) then
        -- Broadcast new capacity
        local msg = zmsg.new()
        -- TODO: send our name with capacity.
        msg:body_fmt("%d", local_capacity)
        -- We stick our own address onto the envelope
        msg:wrap(self, nil)
        msg:send(statebe)
    end
end
-- We never get here but clean up anyhow
localbe:close()
cloudbe:close()
localfe:close()
cloudfe:close()
statefe:close()
monitor:close()
context:term()
```

OK, at last a complete “cloud” has taken shape (even though it all runs in a single process). Even so, the code divides cleanly into distinct modules.
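To make the routing rule of the main loop above easier to see, here is a minimal Python sketch of the same decision (Python being the language of the pyzmq examples this series started from). It models only the logic, with no sockets: idle local workers are used first in LRU order, otherwise the request goes to a random peer broker, and a request arriving from the cloud is only accepted while local workers are idle. The function name `route_request`, its parameters and the worker/peer names are hypothetical, not part of pyzmq or the original example.

```python
import random
from collections import deque


def route_request(source, worker_queue, cloud_capacity, peers, rng=random):
    """Decide where one incoming request should go.

    source:         "local" (from localfe) or "cloud" (from cloudfe)
    worker_queue:   deque of idle local worker addresses, oldest first (LRU)
    cloud_capacity: idle workers last reported by peer brokers
    peers:          names of peer brokers (hypothetical values)
    Returns ("local", worker), ("cloud", peer), or None if it cannot be routed now.
    """
    if source == "cloud" and not worker_queue:
        # Like the Lua loop: cloudfe is only read while we have idle local workers,
        # so the request stays queued on cloudfe for now.
        return None
    if worker_queue:
        # Local capacity first: take the least recently used worker.
        return ("local", worker_queue.popleft())
    if cloud_capacity > 0 and peers:
        # No idle local worker: pick a random peer, as randof(#peers) does.
        return ("cloud", rng.choice(peers))
    return None


# Usage: two idle local workers, one known peer.
queue = deque(["worker-1", "worker-2"])
print(route_request("local", queue, 0, ["broker-B"]))  # ('local', 'worker-1')
```

Note that, as in the Lua code, requests from the cloud are never forwarded back to the cloud, which keeps a request from bouncing between brokers.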

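This inevitably raises the question of data safety, though: what happens if another cluster goes down? Send state updates more often? That doesn't really cure the problem. Perhaps a reply path back to the client could solve it. Well, that is a problem for later.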
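The Lua code keeps a single `cloud_capacity` number that each state message simply overwrites (hence the TODO about tracking each peer). A minimal sketch of the “more frequent state updates” idea, assuming each peer's report is stored with a timestamp and ignored once it is older than `max_age` seconds, might look like this; `PeerCapacity` is a hypothetical helper, not from the original code.

```python
import time


class PeerCapacity:
    """Hypothetical tracker of per-peer capacity that ignores stale reports."""

    def __init__(self, max_age, clock=time.monotonic):
        self.max_age = max_age      # seconds a report stays valid
        self.clock = clock          # injectable clock, handy for testing
        self._reports = {}          # peer name -> (capacity, timestamp)

    def update(self, peer, capacity):
        # Called whenever a state message arrives from `peer`.
        self._reports[peer] = (capacity, self.clock())

    def total(self):
        # Sum only the reports that are still fresh enough to trust.
        now = self.clock()
        return sum(cap for cap, ts in self._reports.values()
                   if now - ts <= self.max_age)
```

As the text suggests, this only narrows the window: a peer can still die right after advertising capacity, and any requests already routed to it are lost.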

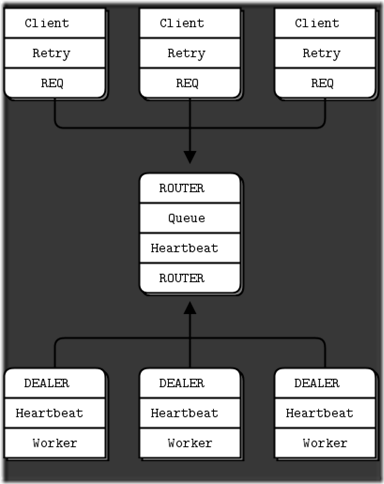

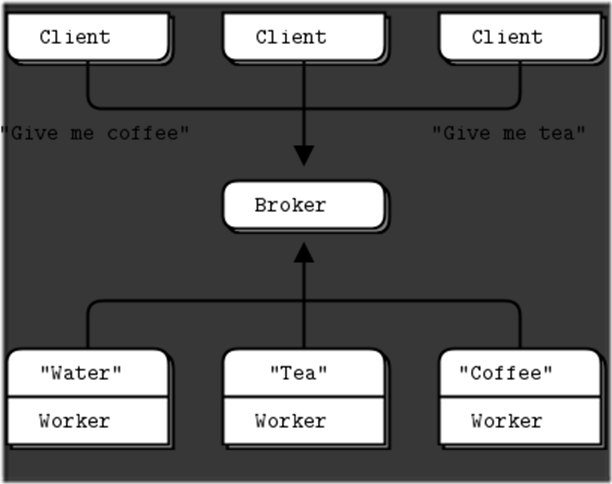

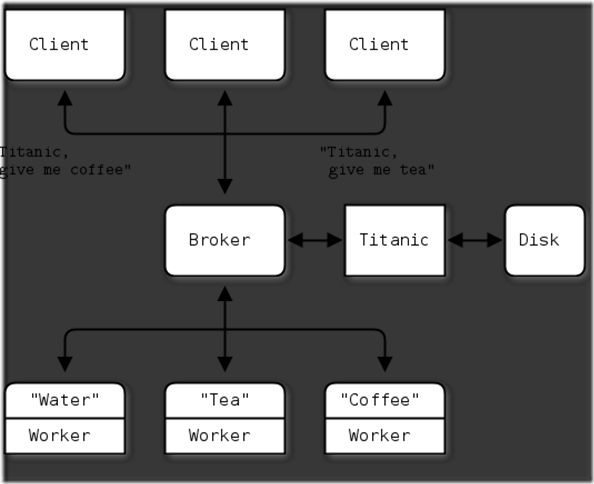

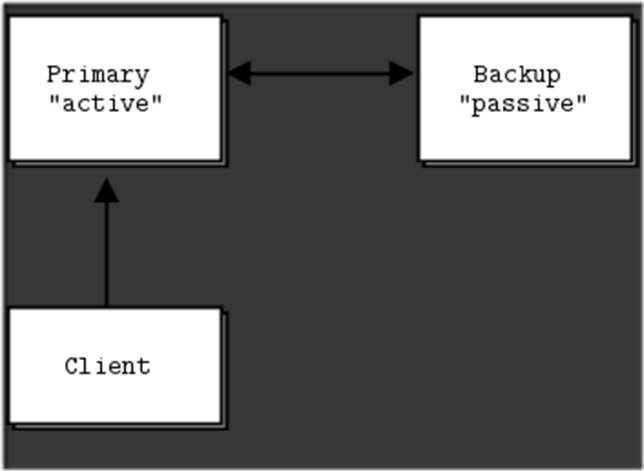

zeroMQ First Look-22. Reliability - Overview


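Right at the start of this series I questioned ZeroMQ's reliability. For such an ambitious project, however, reliability naturally cannot be left as a weak spot, so the guide devotes a whole chapter to explaining and providing solutions that “improve reliability”.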

Altogether, the chapter introduces the following general-purpose patterns:

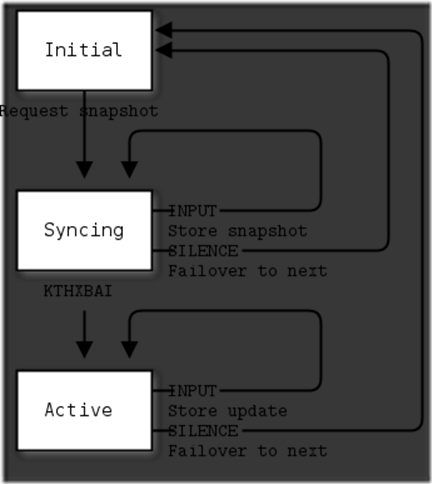

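· Lazy Pirate pattern: the client makes requests and the connection reliable.

· Simple Pirate pattern: reliable request-reply through an LRU queue.

· Paranoid Pirate pattern: heartbeating keeps the connection alive.

· Majordomo pattern: the server side makes requests and the connection reliable.

· Titanic pattern: writing to disk provides a stable data service over unreliable connections.

· Binary Star pattern: primary-backup failover keeps the service reliable.

· Freelance pattern: redundant middleware keeps the service reliable.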

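(Some of the original author's metaphorical names were not clear to me, so treat these names as an index; the short descriptions give the general idea.)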

On reliability:

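To understand what reliability is, it helps to look at its opposite: failure. If a system can handle a particular, predictable failure, we can say it is reliable with respect to that failure (and only that one). So if we can anticipate enough failures and handle each of them appropriately, the reliability of the system as a whole improves greatly. Below are some common failures, listed from most to least frequent: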

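1. Application code: this seems impossible to avoid. Applications built on top of ZeroMQ will always run into problems such as crashing, running out of memory because they cannot consume messages fast enough, or no longer responding to requests.

2. ZeroMQ itself: as the foundation of the whole framework, ZeroMQ's own code can also die or run out of memory. Relatively speaking, though, we should trust it to be reliable (otherwise why use it?).

3. Queue overflow: this kind of overflow can be anticipated (some data is dropped because consumers cannot keep up).

4. Physical network problems: this is a hidden failure. ZeroMQ normally retries the connection, but intermittent message loss is unavoidable.

5. Hardware failure: nothing can be done about it; at the very least, all software running on that hardware has to be considered failed.

6. Message loss on the network: data simply dies in transit...

7. An entire data center or cloud is struck by an earthquake, a volcanic eruption, or some other catastrophe (er... well).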
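Item 3 calls overflow predictable. In ZeroMQ each socket has a high-water mark (HWM) that bounds how many messages it queues for a peer, and a PUB socket that reaches it drops further messages. The following is a pure-Python model of that drop-when-full behaviour, using a hypothetical `DroppingQueue` class; it is not how libzmq implements its queues internally.

```python
from collections import deque


class DroppingQueue:
    """Bounded queue that discards new messages once `hwm` are pending,
    similar in spirit to a PUB socket that has hit its high-water mark."""

    def __init__(self, hwm):
        self.hwm = hwm
        self.pending = deque()
        self.dropped = 0

    def push(self, msg):
        if len(self.pending) >= self.hwm:
            # Slow consumer: the new message is thrown away, not queued.
            self.dropped += 1
            return False
        self.pending.append(msg)
        return True

    def pop(self):
        # The consumer takes the oldest pending message, if any.
        return self.pending.popleft() if self.pending else None
```

Counting drops like this is what makes the failure “predictable”: the application knows data was discarded and can react to it.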

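Expecting this tutorial to provide a complete solution covering all of these problems would be asking too much, so the solutions that follow are a good deal simpler.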

On designing for reliability:

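Reliability engineering can be a thankless job. Seen simply (why make it complicated?), if we keep the time a service spends crashed as short as possible, so that it appears to be running all the time, that is more or less good enough. Error recovery is still indispensable, though!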

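For example:

Request-reply: if a client finds the server slow, it can simply retry (perhaps it will reach a less busy server this time).

Publish-subscribe: if a subscriber misses some messages, adding a separate request-reply channel through which it can ask for them again may be more dependable.

Pipeline: if a worker processing data downstream runs into trouble, the place where results are collected can perhaps flag it.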
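For the publish-subscribe case, the subscriber first has to notice what it missed before it can ask for it over request-reply. A minimal sketch, assuming (hypothetically) that the publisher stamps every message with a consecutive integer sequence number, finds the gaps; `find_gaps` is an illustrative name, and the recovery request itself would go over a separate REQ socket.

```python
def find_gaps(received_seqs, start=0):
    """Return the sequence numbers missing from a subscriber's stream.

    Assumes the publisher numbers messages start, start+1, start+2, ...
    and that `received_seqs` is in arrival order.
    """
    missing = []
    expected = start
    for seq in received_seqs:
        if seq > expected:
            # Everything between the expected number and this one was lost.
            missing.extend(range(expected, seq))
        expected = max(expected, seq + 1)
    return missing


print(find_gaps([0, 1, 4, 5, 7]))  # [2, 3, 6]
```

The resulting list is exactly what the subscriber would send in its “please resend” request.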

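With traditional request-reply over TCP/IP, if the connection breaks or the server stops, the client is quite likely to sit there waiting blindly (forever). In this respect ZeroMQ is much friendlier: at the very least, it will try to reconnect for you~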

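Well, that is still far from enough, but combined with a few extra measures it is actually possible to build a reliable, distributed request-reply network!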

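Broadly speaking, there are three approaches:

1. All clients connect to a single, known server. If something crashes or a link breaks, a simple self-check mechanism plus ZeroMQ's automatic reconnection is enough.

2. All clients connect to a single, known queue server. Since the data sits in the queue, retrying after an application crash should be easy.

3. Many-to-many connections. This is essentially a stronger version of option 1: if one server doesn't work, try connecting to another.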
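Option 3 boils down to “if this server fails, try the next one”. A minimal, transport-agnostic sketch: `send(server, request)` is a hypothetical callback that returns a reply or raises `TimeoutError` when the server does not answer in time, and the endpoint strings are made up for illustration.

```python
def request_with_failover(servers, send, request):
    """Try each server in turn and return (server, reply) from the first that answers."""
    failed = []
    for server in servers:
        try:
            return server, send(server, request)
        except TimeoutError:
            # This server did not answer; remember it and move on.
            failed.append(server)
    raise RuntimeError(f"all servers failed: {failed}")


def fake_send(server, request):
    # Hypothetical transport: server "a" is down, every other server echoes in upper case.
    if server == "tcp://a:5555":
        raise TimeoutError("no reply")
    return request.upper()


print(request_with_failover(["tcp://a:5555", "tcp://b:5555"], fake_send, "ping"))
# ('tcp://b:5555', 'PING')
```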

Er... honestly, I'm a little dizzy myself, but each of these will be explained in detail in the following chapters~

zeroMQ First Look-23. Reliability - Lazy Pirate Pattern


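Compared with the usual blocking approach, this pattern takes only a few simple steps to make the system more reliable (the client no longer simply blocks).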

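This pattern adds or changes the following:

· Poll for the reply to a request already sent, until a response arrives

· If no response arrives within a set time, resend the request

· After a set number of unsuccessful retries, stop sending the request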
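The three rules above fit in a short loop. This is a minimal, transport-agnostic Python sketch: `send`, `recv_with_timeout` and `reopen` are hypothetical callbacks (with pyzmq, `recv_with_timeout` would typically poll the REQ socket with a `zmq.Poller` before calling `recv`). The defaults of 3 retries and a timeout of 3 mirror the `(retries || 3)` and `(timeout || 3)` defaults of the Ruby client below; reading that timeout as seconds is an assumption.

```python
def lazy_pirate_request(send, recv_with_timeout, reopen, request,
                        retries=3, timeout=3.0):
    """Client-side reliability in the Lazy Pirate style.

    Hypothetical callbacks standing in for socket operations:
      send(request)               sends the request on the REQ socket
      recv_with_timeout(timeout)  returns the reply, or None after `timeout` seconds
      reopen()                    closes the REQ socket and creates a fresh one
    """
    for _ in range(retries):
        send(request)
        reply = recv_with_timeout(timeout)
        if reply is not None:
            return reply
        # No reply in time: the old REQ socket still expects a reply and would
        # refuse another send, so replace it before retrying.
        reopen()
    raise RuntimeError(f"no reply after {retries} attempts, giving up")
```

Giving up after a fixed number of attempts is what keeps the client from hanging forever when the server is really gone.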

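Compared with the traditional blocking approach, the benefit is obvious: when no reply comes back, the client can send the request again instead of hanging or running into an unexpected error. (ZeroMQ also quietly does something for you here: when a connection runs into problems, it silently reestablishes it~)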
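Why does the Ruby client below put socket creation in its own `client_sock` method? Presumably so the socket can be recreated: a REQ socket enforces strict send/recv alternation (the “ababab” rule from the first article in this series), so once a reply is lost it can never send again, and in pyzmq a second `send` raises `zmq.ZMQError`. This toy model, a hypothetical `ReqStateModel` class that is not pyzmq, shows the rule in isolation.

```python
class ReqStateModel:
    """Toy model of a REQ socket's strict send/recv alternation.
    Real REQ sockets enforce this inside libzmq; this is only an illustration."""

    def __init__(self):
        self.awaiting_reply = False

    def send(self, msg):
        if self.awaiting_reply:
            raise RuntimeError("REQ: must receive a reply before sending again")
        self.awaiting_reply = True

    def recv(self, reply):
        # `reply` stands in for whatever the server sends back.
        if not self.awaiting_reply:
            raise RuntimeError("REQ: nothing was sent")
        self.awaiting_reply = False
        return reply


sock = ReqStateModel()
sock.send("hello")          # the reply to this request gets lost...
sock = ReqStateModel()      # ...so "reopen": a fresh socket may send again
sock.send("hello")
print(sock.recv("world"))   # world
```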

client:

require 'rubygems'
require 'zmq'

class LPClient
  def initialize(connect, retries = nil, timeout = nil)
    @connect = connect
    @retries = (retries || 3).to_i
    @timeout = (timeout || 3).to_i
    @ctx = ZMQ::Context.new(1)
    client_sock
    at_exit do
      @socket.close
    end
  end

  def client_sock
    @socket = @ctx.socket(ZMQ::REQ)